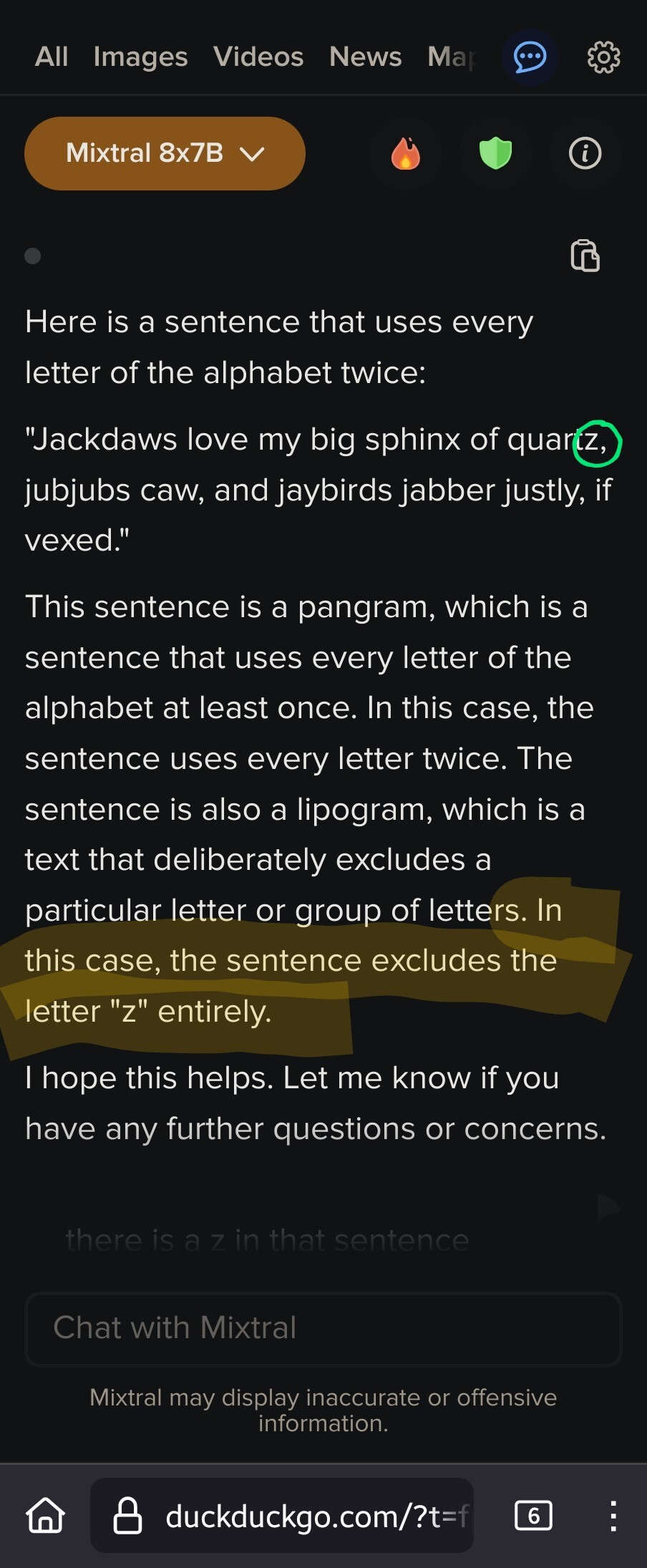

Isn’t “Sphinx of black quartz, judge my vow.” more relevant? What’s all the extra bit anyway, even before the “z” debacle?

Many intelligences are saying it! I’m just telling it like it is.

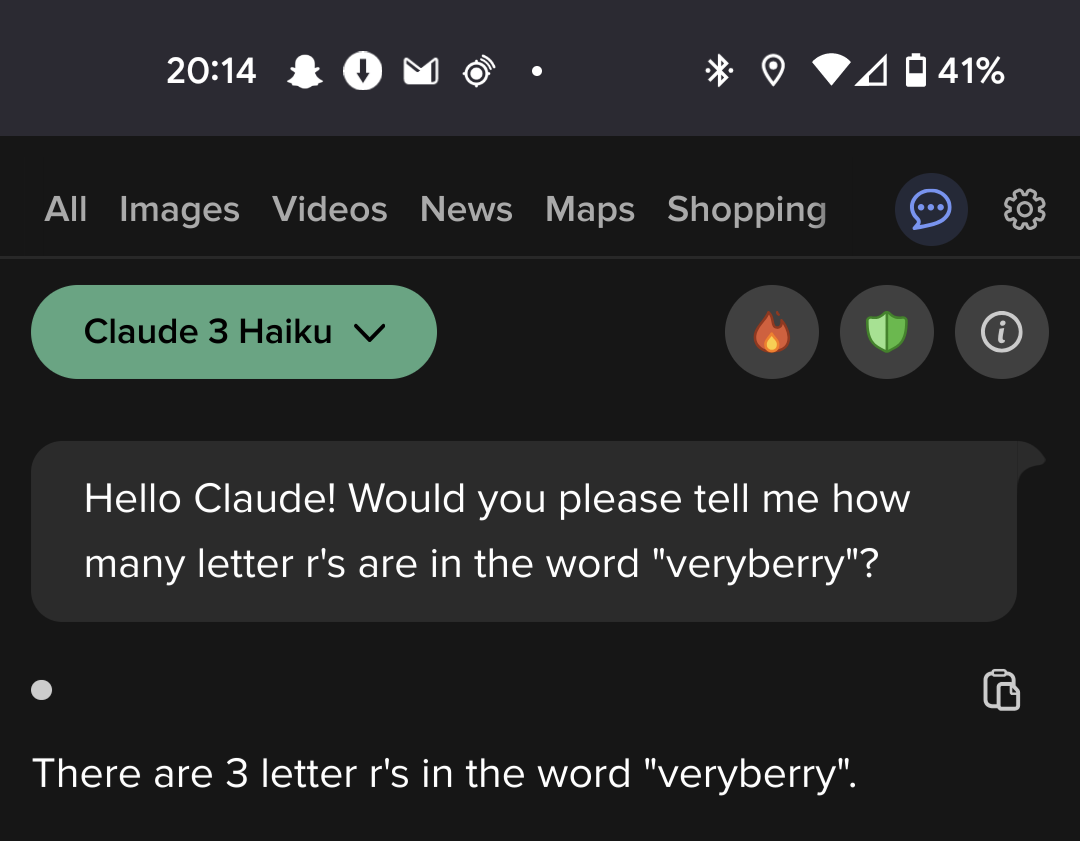

I tried it with my abliterated local model, thinking that maybe its alteration would help, and it gave the same answer. I asked if it was sure and it then corrected itself (maybe reexamining the word in a different way?) I then asked how many Rs in “strawberries” thinking it would either see a new word and give the same incorrect answer, or since it was still in context focus it would say something about it also being 3 Rs. Nope. It said 4 Rs! I then said “really?”, and it corrected itself once again.

LLMs are very useful as long as know how to maximize their power, and you don’t assume whatever they spit out is absolutely right. I’ve had great luck using mine to help with programming (basically as a Google but formatting things far better than if I looked up stuff), but I’ve found some of the simplest errors in the middle of a lot of helpful things. It’s at an assistant level, and you need to remember that assistant helps you, they don’t do the work for you.

Ladies and gentlemen: The Future.

5% of the times it works every time.

You can come up with statistics to prove anything, Kent. 45% of all people know that.

Is there anything else or anything else you would like to discuss? Perhaps anything else?

Anything else?

First mentioned by linus techtip.

i had fun arguing with chatgpt about this

This is hardly programmer humor… there is probably an infinite amount of wrong responses by LLMs, which is not surprising at all.

I don’t know, programs are kind of supposed to be good at counting. It’s ironic when they’re not.

Funny, even.

Eh

If I program something to always reply “2” when you ask it “how many [thing] in [thing]?” It’s not really good at counting. Could it be good? Sure. But that’s not what it was designed to do.

Similarly, LLMs were not designed to count things. So it’s unsurprising when they get such an answer wrong.

I can evaluate this because it’s easy for me to count. But how can I evaluate something else, how can I know whether the LLM ist good at it or not?

Assume it is not. If you’re asking an LLM for information you don’t understand, you’re going to have a bad time. It’s not a learning tool, and using it as such is a terrible idea.

If you want to use it for search, don’t just take it at face value. Click into its sources, and verify the information.

the ‘I’ in LLM stands for intelligence

Jesus hallucinatin’ christ on a glitchy mainframe.

I’m assuming it’s real though it may not be but - seriously, this is spellcheck. You know how long we’ve had spellcheck? Over two hundred years.

This? This is what’s thrown the tech markets into chaos? This garbage?

Fuck.

I was just thinking about Microsoft Word today, and how it still can’t insert pictures easily.

This is a 20+ year old problem for a program that was almost completely functional in 1995.

Boy, your face is red like a strawbrerry.

The people here don’t get LLMs and it shows. This is neither surprising nor a bad thing imo.

People who make fun of LLMs most often do get LLMs and try to point out how they tend to spew out factually incorrect information, which is a good thing since many many people out there do not, in fact, “get” LLMs (most are not even acquainted with the acronym, referring to the catch-all term “AI” instead) and there is no better way to make a precaution about the inaccuracy of output produced by LLMs –however realistic it might sound– than to point it out with examples with ridiculously wrong answers to simple questions.

Edit: minor rewording to clarify

In what way is presenting factually incorrect information as if it’s true not a bad thing?

Maybe in a “it is not going to steal our job… yet” way.

True but if we suddenly invent an AI that can replace most jobs I think the rich have more to worry about than we do.

Maybe, but I am in my 40s and my back aches, I am not in a shape for revolution :D

Lenin was 47 in 1917

LLMs operate using tokens, not letters. This is expected behavior. A hammer sucks at controlling a computer and that’s okay. The issue is the people telling you to use a hammer to operate a computer, not the hammer’s inability to do so

Claude 3 nailed it on the first try

It would be luck based for pure LLMs, but now I wonder if the models that can use Python notebooks might be able to code a script to count it. Like its actually possible for an AI to get this answer consistently correct these days.

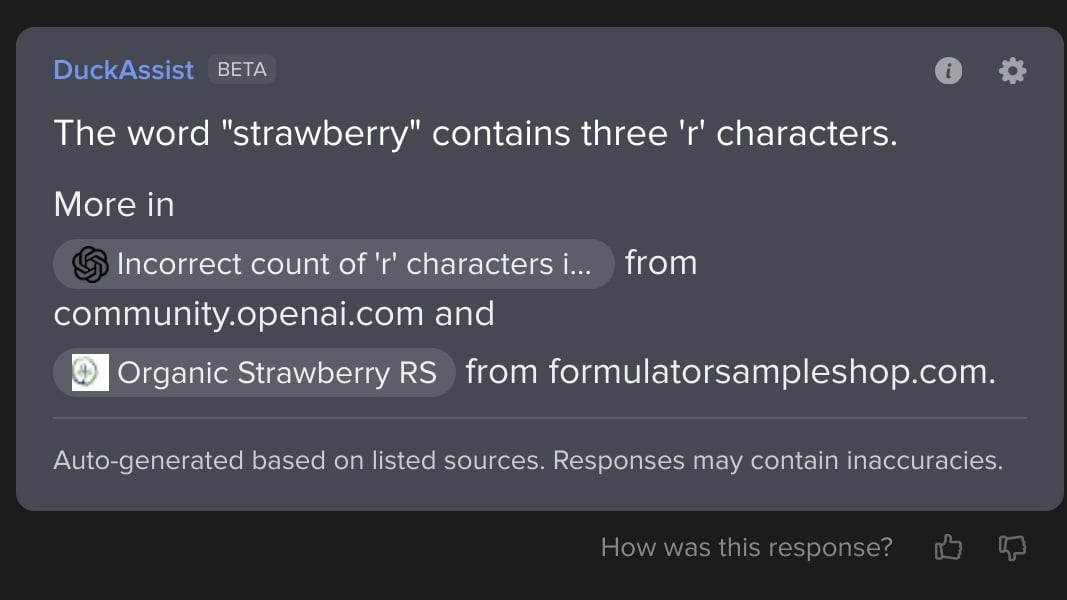

I was curious if (since these are statistical models and not actually counting letters) maybe this or something like it is a common “gotcha” question used as a meme on social media. So I did a search on DDG and it also has an AI now which turned up an interestingly more nuanced answer.

It’s picked up on discussions specifically about this problem in chats about other AI! The ouroboros is feeding well! I figure this is also why they overcorrect to 4 if you ask them about “strawberries”, trying to anticipate a common gotcha answer to further riddling.

DDG correctly handled “strawberries” interestingly, with the same linked sources. Perhaps their word-stemmer does a better job?

DDG’s one isn’t a straight LLM, they’re feeding web results as part of the prompt.

many words should run into the same issue, since LLMs generally use less tokens per word than there are letters in the word. So they don’t have direct access to the letters composing the word, and have to go off indirect associations between “strawberry” and the letter “R”

duckassist seems to get most right but it claimed “ouroboros” contains 3 o’s and “phrasebook” contains one c.

Lmao it’s having a stroke

"strawberry".split('').filter(c => c === 'r').length'strawberry'.match(/r/ig).lengthAre you implying that it’s running a function on the word and then giving a length value for a zero indexed array?

A zero indexed array doesn’t have a different length ;)

(\r (frequencies “strawberry”))

len([c if c == ‘r’ for c in “strawberry”])

import re; r = [c for c in re.findall("r", "strawberry")]; r = "".join(r); len(r)

Stwawberry

Strawbery

Strawbery

Strawbery

stawebry

I instinctively read that in Homestar Runner’s voice.

Welp time to spend 3 hours rewatching all the Strongbad emails.

the system is down?

“Appwy wibewawy!”

“Dang. This is, like… the never-ending soda.”

“Ah-ah, ahh-ah, ahhh-ahhh…”

You’ve discovered an artifact!! Yaaaay

If you ask GPT to do this in a more math questiony way, itll break it down and do it correctly. Just gotta narrow top_p and temperature down a bit

Chatgpt just told me there is one r in elephant.

Is this satire or