I don’t think that casting a range of bits as some other arbitrary type “is a bug nobody sees coming”.

C++ compilers also warn you that this is likely an issue and will fail to compile if configured to do so. But it will let you do it if you really want to.

That’s why I love C++

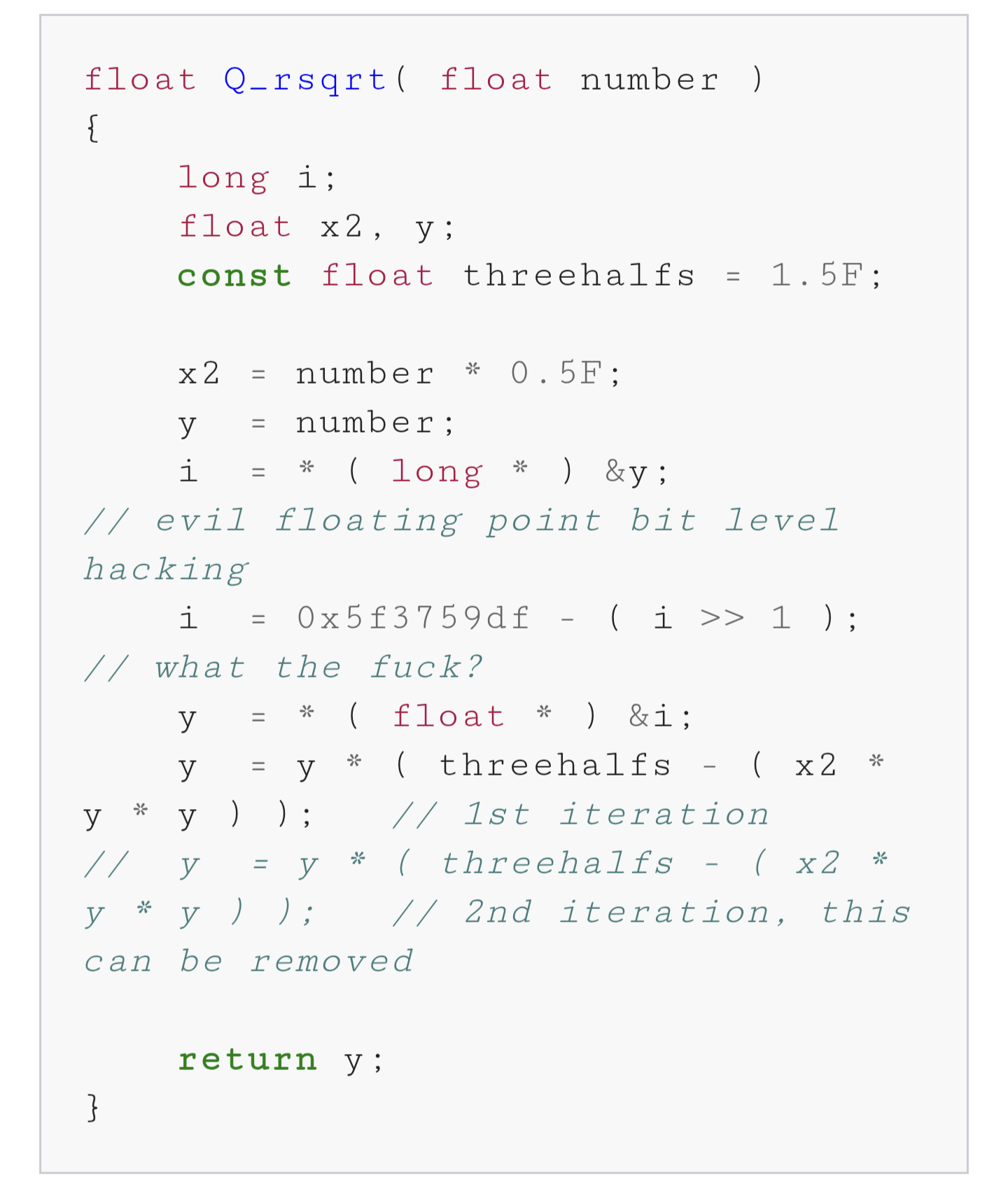

The problem is that it’s undefined behavior. Quake’s fast inverse square root only works because the types just happen to look that way, because floats just happen to have that bit arrangement. It could look very different on other machines! Never mind that it’s essentially always exactly the same on most architectures. So yeah. Undefined behavior is there to keep your code usable even if our assumptions about types and memory change completely one day.

What do you mean I’m not supposed to add 0x5f3759df to a float casted as a long, bitshifted right by 1?

`// what the fuck?`

They know. It’s a comment from the code.

I don’t know which is worse. Using C++ like lazy C, or using C++ like it was designed to be used.

An acquaintance of mine once wrote a finite element method solver entirely in C++ templates.

Aand what is wrong with that?

Structs with union members that allow the same place in memory to be accessed either word-wise, byte-wise, or even bit-wise are a godsend for everyone who needs to access IO spaces, and I’m happy my C compiler lets me do it.
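A union does this directly in C. In C++, reading a union member other than the one last written is formally undefined, so here is a rough sketch that gets the same word/byte/bit views with shifts and masks instead. `Reg32` and its member names are made up for illustration, and it models a plain value rather than a real memory-mapped register.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical register view: one 32-bit word, readable per byte or per bit.
struct Reg32 {
    std::uint32_t word;

    // Byte n, counted from the least significant byte (n must be 0..3).
    std::uint8_t byte(unsigned n) const {
        assert(n < 4);
        return static_cast<std::uint8_t>(word >> (8u * n));
    }

    // Bit n, counted from the least significant bit (n must be 0..31).
    bool bit(unsigned n) const {
        assert(n < 32);
        return ((word >> n) & 1u) != 0;
    }

    // Set or clear bit n without touching the others.
    void set_bit(unsigned n, bool value) {
        assert(n < 32);
        const std::uint32_t mask = std::uint32_t{1} << n;
        word = value ? (word | mask) : (word & ~mask);
    }
};
```

Unlike the union, the byte order here does not depend on the endianness of the machine, which may or may not be what you want for real hardware.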

#pragma push

Context?

You use it to “pack” bitfields, bytes, etc. together in structs/classes (without functions); otherwise the compiler usually aligns every variable on a 32-bit boundary for speed.

You don’t need that pragma to pack bitfields.

With, say, a 3-bit int, then a 2-bit int, and various char, int, and so on, you did have to use the pragma with GCC and Visual C++ around 2012 at least.

OK, I use the Keil ARM compiler, and never needed to push anything.
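To make the packing discussion concrete, here is a small sketch. It assumes the pragma being discussed is `#pragma pack(push, 1)` / `#pragma pack(pop)`, which GCC, Clang and MSVC all accept. The struct names are made up. Exact sizes of the unpacked structs are implementation-defined, so only the packed one is asserted.

```cpp
#include <cstdint>

// Default layout: the compiler may insert padding after `tag`
// so that `value` is naturally aligned (commonly 8 bytes in total).
struct Unpacked {
    std::uint8_t  tag;
    std::uint32_t value;
};

// Packed layout: no padding between members.
#pragma pack(push, 1)
struct Packed {
    std::uint8_t  tag;
    std::uint32_t value;
};
#pragma pack(pop)

// Adjacent bitfields share one storage unit without any pragma.
struct Flags {
    unsigned a : 3;  // holds 0..7
    unsigned b : 2;  // holds 0..3
};

static_assert(sizeof(Packed) == 5, "1 + 4 bytes, no padding");
static_assert(sizeof(Unpacked) >= sizeof(Packed), "padding can only add bytes");
```

So both sides of the exchange can be right: the bitfields pack among themselves, but mixing them with `char` and `int` members can still leave padding that only the pragma removes.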

I’m all for having the ability to do these shenanigans in principle, but prefer if they are guarded in an `unsafe` block.

Don’t be average

I used to love C++ until I learned Rust. Now I think it is obnoxious, because even if you write modern C++, without raw pointers, casting and the like, you will be constantly questioning whether you do stuff right. The spec is just way too complicated at this point and it can only get worse, unless they choose to break backwards compatibility and throw out the pre C++11 bullshit

I suppose it’s a matter of experience and practise. The more you work with it the better you get. As usual with all things one can learn.

The question becomes, then, if I spend 5 years learning and mastering C++ versus rust, which one is going to help me produce a better product in the end?

Depending on what I’m doing, sometimes rust will annoy me just as much. Often I’m doing something I know is definitely right, but I have to go through so much ceremony to get it to work in rust. The most commonly annoying example I can think of is trying to mutably borrow two distinct fields of a struct at the same time. You can’t do it. It’s the worst.

Why use a strongly typed language at all, then?

Sounds unnecessarily restrictive, right? Just cast whatever as whatever and let future devs sort it out.

`$myConstant = '15';`

`$myOtherConstant = getDateTime();`

`$buggyShit = $myConstant + $myOtherConstant;`

Fuck everyone who comes after me for the next 20 years.

No need to cast as any types at all just work with bits directly /s

“C++ compilers also warn you…”

Ok, quick question here for people who work in C++ with other people (not personal projects). How many warnings does the code produce when it’s compiled?

I’ve written a little bit of C++ decades ago, and since then I’ve worked alongside devs who worked on C++ projects. I’ve never seen a codebase that didn’t produce hundreds if not thousands of lines of warnings when compiling.

You shouldn’t have any warnings. They can be totally benign, but when you get used to seeing warnings, you will not see the one that does matter.

I know, that’s why it bothered me that it seemed to be “policy” to just ignore them.

Depends on the age of the codebase, the age of the compiler and the culture of the team.

I’ve arrived into a team with 1000+ warnings, no const correctness (code had been ported from a C codebase) and nothing but C style casts. Within 6 months, we had it all cleaned up but my least favourite memory from that time was “I’ll just make this const correct; ah, right, and then this; and now I have to do this” etc etc. A right pain.

So, did you get it down to 0 warnings and manage to keep it there? Or did it eventually start creeping up again?

I’m not the person you’re asking but surely they just told the compiler to treat warnings as errors after that. No warnings can creep in then!

I mostly see warnings when compiling source code of other projects. If you get a warning as a dev, it’s your responsibility to deal with it. But also your risk, if you don’t. I made it a habit to fix every warning in my own projects. For prototyping I might ignore them temporarily. Some types of warnings are unavoidable sometimes.

If you want to make yourself not ignore warnings, you can compile with `-Werror` if using GCC/G++ to make the compiler a pedantic asshole that doesn’t compile until you fix every fucking warning. Not advisable for drafting code, but definitely if you want to ship it.

Except when you have to cast size_t to int and vice versa (for “small” numbers). I hate that warning.
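One way to live with the size_t/int warning is to funnel the conversions through two tiny helpers that check the range once and then `static_cast`. This is only a sketch: `to_int` and `to_size` are names I made up, and throwing is just one possible policy.

```cpp
#include <cstddef>
#include <limits>
#include <stdexcept>

// Hypothetical helper: size_t -> int, refusing values that do not fit.
inline int to_int(std::size_t n) {
    if (n > static_cast<std::size_t>(std::numeric_limits<int>::max())) {
        throw std::out_of_range("size_t value does not fit in int");
    }
    return static_cast<int>(n);
}

// Hypothetical helper: int -> size_t, refusing negative values.
inline std::size_t to_size(int n) {
    if (n < 0) {
        throw std::out_of_range("negative value cannot become size_t");
    }
    return static_cast<std::size_t>(n);
}
```

The explicit cast keeps the compiler quiet, and the check means the “small numbers” assumption is actually enforced rather than hoped for.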

Ideally? Zero. I’m sure some teams require “warnings as errors” as a compiler setting for all work to pass muster.

In reality, there’s going to be odd corner-cases where some non-type-safe stuff is needed, which will make your compiler unhappy. I’ve seen this a bunch in 3rd party library headers, sadly. So it ultimately doesn’t matter how good my code is.

There’s also a shedload of legacy things going on a lot of the time, like having to just let all warnings through because of the handful of places that will never be warning free. IMO it’s a way better practice to turn a warning off for a specific line. Sad thing is, that mechanism is newer than C++ itself and is implementation dependent, so it probably doesn’t get used as much.
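For reference, turning a warning off for a specific line looks roughly like this with GCC and Clang. The sketch uses `#pragma GCC diagnostic` with `-Wsign-compare` as the example warning, and `sum_first` is a made-up function. MSVC has its own `#pragma warning(push)` / `#pragma warning(disable: ...)` / `#pragma warning(pop)` equivalent, which is not shown here.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical function: sums at most `limit` leading elements of `v`.
inline int sum_first(const std::vector<int>& v, int limit) {
    int total = 0;
#if defined(__GNUC__)
#pragma GCC diagnostic push
#pragma GCC diagnostic ignored "-Wsign-compare"
#endif
    // Comparing int with size_t is what -Wsign-compare complains about.
    for (int i = 0; i < limit && i < v.size(); ++i) {
        total += v[static_cast<std::size_t>(i)];
    }
#if defined(__GNUC__)
#pragma GCC diagnostic pop
#endif
    return total;
}
```

The push/pop pair keeps the suppression local, so the rest of the file still builds cleanly under `-Werror`.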

“I’ve seen this a bunch in 3rd party library headers, sadly. So it ultimately doesn’t matter how good my code is.”

Yeah, I’ve seen that too. The problem is that once the library starts spitting out warnings it’s hard to spot your own warnings.

Yuuup. Makes me wonder if there’s a viable “diaper pattern” for this kind of thing. I’m sure someone has solved that, just not with the usual old-school packaging tools (e.g. automake).

I work on one of the larger c++ projects out there (20 to 50 million lines range) and though I don’t see the full build logs I’ve yet to see a component that has a warning.

0 in our case, but we are pretty strict. Same at the first place I worked too. Big tech companies.

Ignoring warnings is really not a good way to deal with it. If a compiler is bitching about something there is a reason to.

A lot of times the devs are too overworked or a little underloaded in the supply of fucks to give, so they ignore them.

In some really high quality codebases, they turn on “treat warnings as errors” to ensure better code.

I know that should be the philosophy, but is it? In my experience it seems to be normal to ignore warnings.

Production code should never have any warnings left. This is a simple rule that will save a lot of headaches.

Neither should your development code, except for the part you’re working on.

My team uses the -Werror flag, so our code won’t compile if there are any warnings at all.

I put -Werror at the end of my makefile cflags so it actually treats warnings as errors now.

As it should be. Airbags should go off when you crash, not when you drive near the edge of a cliff.

“But it will let you do it if you really want to.”

Now, I’ve seen this a couple of times in this post. The idea that the compiler will let you do anything is so bizarre to me. It’s not a matter of being allowed by the software to do anything. The software will do what you goddamn tell it to do, or it gets replaced.

WE’RE the humans, we’re not asking some silicon diodes for permission. What the actual fuck?!? We created the fucking thing to do our bidding, and now we’re all oh pwueez mr computer sir, may I have another ADC EAX, R13? FUCK THAT! Either the computer performs like the tool it is, or it goes the way of broken hammers and lawnmowers!

Soldiers are supposed to question potentially-illegal orders and refuse to execute them if their commanding officer can’t give a good reason why they’re justified. Being in charge doesn’t mean you’re infallible, and there are plenty of mistakes programmers make that the compiler can detect.

I get the analogy, but I don’t think that it’s valid. Soldiers are, much to the chagrin of their commanders, sentient beings, and should question potentially illegal orders.

Where the analogy doesn’t hold is, besides my computer not being sentient, what I’m prevented from doing isn’t against the law of man.

I’m not claiming to be infallible. After all to err is human, and I’m indeed very human. But throw me a warning when I do something that goes against best practices, that’s fine. Whether I deal with it is something for me to decide. But stopping me from doing what I’m trying to do, because it’s potentially problematic? GTFO with that kinda BS.

This comment makes me want to reformat every fucking thing i use and bend it to -my- will like some sort of technomancer

I will bottom for my rust compiler, I’m not going to argue with it.

when life gives you restrictive compilers, don’t request permission from them! make life take the compilers back! Get mad! I don’t want your damn restrictive compilers, what the hell am I supposed to do with these? Demand to see life’s manager! Make life rue the day it thought it could give BigDanishGuy restrictive compilers! Do you know who I am? I’m the man who’s gonna burn your house down! With the compilers! I’m gonna get my engineers to invent a combustible compiler that burns your house down!

Ok gramps now take your meds and off you go to the retirement home

Stupid cloud, who’s laughing now?

Yup, I am with you on this one

I understand the idea. But many people have hugely mistaken beliefs about what the C[++] languages are and how they work. When you write ADC EAX, R13 in assembly, that’s it. But C is not a “portable assembler”! It has its own complicated logic. You might think that by writing ++i, you are writing just some INC [i] or whatnot. You are not. To make a silly example, writing `int i=INT_MAX; ++i;` you are not telling the compiler to produce INT_MIN. You are just telling it complete nonsense. And it would be better if the compiler “prevented” you from doing it, forcing you to explain yourself better.

I get what you’re saying. I guess what I’m yelling at the clouds about is the common discourse more than anything else.

If a screw has a slotted head, and your screwdriver is a torx, few people would say that the screwdriver won’t allow them to do something.

Computers are just tools, and we’re the ones who created them. We shouldn’t be submissive, we should acknowledge that we have taken the wrong approach at solving something and do it a different way. Just like I would bitch about never having the correct screwdriver handy, and then go look for the right one.

Yeah, but there’s some things computers are genuinely better at than humans, which is why we code in the first place. I totally agree that you shouldn’t be completely controlled by your machine, but strong nudging saves a lot of trouble.
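Coming back to the `int i=INT_MAX; ++i;` example: if you actually want a defined result, you have to say which one. A minimal sketch of the two usual options, with made-up function names: either check before incrementing a signed value, or use an unsigned type, where wrap-around is defined as arithmetic modulo 2^N.

```cpp
#include <climits>

// Hypothetical helper: increments a signed int only when that cannot overflow.
// Returns false (and leaves `out` untouched) when i is already INT_MAX.
inline bool checked_increment(int i, int& out) {
    if (i == INT_MAX) {
        return false;  // ++i here would be undefined behavior
    }
    out = i + 1;
    return true;
}

// Unsigned arithmetic wraps around by definition, so this is always fine.
inline unsigned wrapping_increment(unsigned u) {
    return u + 1u;
}
```

Either way, the code now states what should happen at the boundary instead of leaving it to whatever the optimizer makes of it.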

I actually do like that C/C++ let you do this stuff.

Sometimes it’s nice to acknowledge that I’m writing software for a computer and it’s all just bytes. Sometimes I don’t really want to wrestle with the ivory tower of abstract type theory mixed with vague compiler errors, I just want to allocate a block of memory and apply a minimal set of rules on top.

100%. In my opinion, the whole “build your program around your model of the world” mantra has caused more harm than good. Lots of “best practices” seem to be accepted without any qualitative measurement to prove it’s actually better. I want to think it’s just the growing pains of a young field.

Even with qualitative measurements they can do stupid things.

For work I have to write code in C# and Microsoft found that null reference exceptions were a common issue. They actually calculated how much these issues cost the industry (some big number) and put a lot of effort into changing the language so there’s a lot of warnings when something is null.

But the end result is people just set things to an empty value instead of leaving it as null to avoid the warnings. And sure great, you don’t have null reference exceptions because a value that defaulted to null didn’t get set. But now you have issues where a value is an empty string when it should have been set.

The exception message would tell you exactly where in the code there’s a mistake, and you’ll immediately know there’s a problem and it’s more likely to be discovered by unit tests or QA. An empty value where something was supposed to be set may not be noticed for a while and is difficult to track down.

So their research indicated a costly issue (which is ultimately a dev making a mistake) and they fixed it by creating an even more costly issue.

There’s always going to be things where it’s the responsibility of the developer to deal with, and there’s no fix for it at the language level. Trying to fix it with language changes can just make things worse.

For this example, I feel that it is actually fairly ergonomic in languages that have an `Option` type (like Rust), which can either be `Some` value or no value (`None`), and don’t normally have `null` as a concept. It normalizes explicitly dealing with the `None` instead of having `null` or hidden empty strings and such.

I just prefer an exception be thrown if I forget to set something so it’s likely to happen as soon as I test it and will be easy to find where I missed something.

I don’t think a language is going to prevent someone from making a human error when writing code, but it should make it easy to diagnose and fix it when it happens. If you call it null, “”, empty, None, undefined or anything else, it doesn’t change the fact that sometimes the person writing the code just forgot something.

Abstracting away from the problem just makes it more fuzzy on where I just forgot a line of code somewhere. Throwing an exception means I know immediately that I missed something, and also the part of the code where I made the mistake. Trying to eliminate the exception doesn’t actually solve the problem, it just hides the problem and makes it more difficult to track down when someone eventually notices something wasn’t populated.

Sometimes you want the program to fail, and fail fast (while testing) and in a very obvious way. Trying to make the language more “reliable” instead of having the reliability of the software be the responsibility of the developer can mean the software always “works”, but it doesn’t actually do what it’s supposed to do.

Is the software really working if it never throws an exception but doesn’t actually do what it’s supposed to do?
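Since this thread started with C++: the closest analogue there is `std::optional` (C++17), and it can illustrate both positions. In this rough sketch, `User` and the two accessor functions are hypothetical. `value()` throws `std::bad_optional_access` when nothing was set, which is the fail-fast behavior, while `value_or("")` quietly substitutes an empty string, which is the kind of hidden default being complained about.

```cpp
#include <optional>
#include <string>

// Hypothetical record with a field that may legitimately be unset.
struct User {
    std::optional<std::string> email;
};

// Fail fast: throws std::bad_optional_access if the field was never set.
inline std::string email_or_throw(const User& u) {
    return u.email.value();
}

// Silent default: an unset field becomes "", and the mistake stays hidden.
inline std::string email_or_empty(const User& u) {
    return u.email.value_or("");
}
```

The type does not decide the policy for you. It only forces the choice between the two to be written down at the point of use.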

People just think that applying arbitrary rules somehow makes software magically more secure, like with rust, as if the compiler won’t just “let you” do the exact same fucking thing if you type the `unsafe` keyword

You don’t even need `unsafe`, you can just take user input and execute it in a shell and rust will let you do it. Totally insecure!

Rust isn’t memory safe because you can invoke another program that isn’t memory safe?

My comment is sarcastic, obviously. The argument Kairos gave is similar to this. You can still introduce vulnerabilities. The issue is normally that you introduce them accidentally. Rust gives you safety, but does not put your code into a sandbox. It looked to me like they weren’t aware of this difference.

It’s neither arbitrary nor magic; it’s math. And `unsafe` doesn’t disable the type system, it just lets you dereference raw pointers.

You don’t need `unsafe` to write vulnerable code in rust.

I don’t know rust, but for example in Swift the type system can make things way more difficult.

Before they added macros if you wanted to write ORM code on a SQL database it was brutal, and if you need to go into raw buffers it’s generally easier to just write C/objc code and a bridging header. The type system can make it harder to reason about performance too because you lose some visibility in what actually gets compiled.

The Swift type system has improved, but I’ve spent a lot of time fighting with it. I just try to avoid generics and type erasure now.

I’ve had similar experiences with Java and Scala.

That’s what I mean about it being nice to drop out of setting up some type hierarchy and interfaces and just working with raw buffers or function pointers.

Thank you for including the text as text.