A couple of years ago, my friend wanted to learn programming, so I was giving her a hand with resources and reviewing her code. She got to the part on adding code comments, and wrote the now-infamous line,

```python
i = i + 1 #this increments i
```

We’ve all written superfluous comments, especially as beginners. And it’s not even really funny, but for whatever reason, we both still remember this specific line years later and laugh at it together.

Years later (this week), to poke fun, I started writing sillier and sillier ways to increment i:

Beginner level:

```python
# this increments i:
x = i
x = x + int(True)
i = x
```

Beginner++ level:

```python
# this increments i:
def increment(val):
    for i in range(val+1):
        output = i + 1
    return output
```

Intermediate level:

```python
# this increments i:
class NumIncrementor:
    def __init__(self, initial_num):
        self.internal_num = initial_num

    def increment_number(self):
        incremented_number = 0
        # we add 1 each iteration for indexing reasons
        for i in list(range(self.internal_num)) + [len(range(self.internal_num))]:
            incremented_number = i + 1 # fix obo error by incrementing i. I won't use recursion, I won't use recursion, I won't use recursion
        self.internal_num = incremented_number

    def get_incremented_number(self):
        return self.internal_num

i = int(input("Enter a number:"))  # input() returns a str; range() needs an int
incrementor = NumIncrementor(i)
incrementor.increment_number()
i = incrementor.get_incremented_number()
print(i)
```

Since I’m obviously very bored, I thought I’d ask for your take on the “best” way to increment an int in your language of choice; I don’t think my code is quite expert-level enough. Consider it a sort of Advent of Code challenge? Any code which does not contain the comment “this increments i:” will produce a compile error and fail to run.

No AI code pls. That’s no fun.

74181

(A + 1)

A0:A3 = (Input Register)

S0:S3 = Low

Mode = Low

CarryN = High

Q1:Q4 = (Output)

https://en.wikipedia.org/wiki/74181
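For anyone without a 74181 in the parts bin, here is a rough software sketch of the same A + 1 idea: the "+1" goes in as the carry into bit 0 and ripples up through a chain of half adders, wrapping at 4 bits with a carry out. The function name `ripple_increment` is made up, and this is only a behavioral analogue of this one operation; the real 74181 uses internal carry lookahead and supports many other functions.

```python
def ripple_increment(a: int, width: int = 4) -> tuple[int, int]:
    """Add 1 to a `width`-bit value with a chain of half adders.

    Returns (sum_bits, carry_out); the sum wraps around like a 4-bit ALU."""
    carry = 1  # the "+1" enters as the carry into bit 0
    result = 0
    for bit in range(width):
        a_bit = (a >> bit) & 1
        result |= (a_bit ^ carry) << bit  # half-adder sum bit
        carry = a_bit & carry             # half-adder carry into the next bit
    return result, carry


print(ripple_increment(0b0101))  # (6, 0)
print(ripple_increment(0b1111))  # (0, 1): wrapped, carry out set
```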


Funnily enough, it is one of the understood operations that I did not integrate into the on-chip truth table. I had some ideas for extra syntax, but the point is to avoid needing to look at reference docs as much as possible, and none of my ideas for this one were intuitive enough to satisfy me.

My favourite one is: `i -=- 1`

The near symmetry, ah, I see we’ve found the true Vorin solution.

Upvote for the Stormlight Archive reference.

The hot dog operator

This is actually the correct way to do it in JavaScript, especially if the right-hand side is more than 1. If JavaScript thinks `i` contains a string, and let’s say its value is `"27"`, `i += 1` will result in `i` containing `"271"`. Subtraction doesn’t have any weird string-versus-number semantics and neither does unary minus, so `i -=- 1` guarantees `28` in this case.

For the increment case, `++` works properly whether JavaScript thinks `i` is a string or not, but since the joke is to avoid it, here we are.

Every day, JS strays further from God’s light :D
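For contrast, the same spelling also parses in Python: the tokenizer reads `i -=- 1` as `-=` followed by a unary minus. Python has no implicit string-to-number coercion, though, so on a string both forms fail loudly instead of producing `"271"` or `28`. A small sketch:

```python
i = 27
i -=- 1  # tokenized as: i -= -1
print(i)  # 28

s = "27"
try:
    s += 1  # JavaScript would produce "271"
except TypeError as err:
    print("s += 1 ->", err)
try:
    s -= -1  # JavaScript would produce 28
except TypeError as err:
    print("s -= -1 ->", err)
```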

The solution is clear: Don’t use any strings

It looks kinda symmetrical, I can dig it!

```typescript
// this increments i:
function increment(val: number): number {
  for (let i: number = 1; i <= 100; i = i + 1) {
    val = val + 0.01
  }
  return Math.round(val)
}

let i = 100
i = increment(i) // 101
```

This should get bonus points for incrementing `i` by 1 as part of the process for incrementing `i` by 1.
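A Python transliteration of that function, to show why the final `Math.round` matters: `0.01` has no exact binary floating-point representation, so a hundred additions may not land exactly on `val + 1`. Python's `round()` stands in for `Math.round` here; the two differ on exact .5 ties, which don't arise for an input like 100.

```python
def increment(val: float) -> int:
    # this increments i:
    for _ in range(100):
        val = val + 0.01  # each addition can carry a tiny rounding error
    # the accumulated sum may sit a hair away from the exact answer,
    # so snap it back to the nearest integer
    return round(val)


i = 100
i = increment(i)
print(i)  # 101
```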

Why not wait for a random bit flip to increment it?

```c
int i = 0;
while (i != i + 1); // i is now incremented
```

But if `i` gets randomly bit-flipped, wouldn’t `i != i + 1` still be true? It would have to get flipped at exactly the right time, assuming that the CPU requests it from memory twice to run that line? It’d probably be cached anyway.

I was thinking you’d need to store the original values, like `x = i` and `y = i + 1` and `while x != y` etc… but then what if `x` or `y` gets bit-flipped? Maybe we hash them and keep checking if the hash is correct. But then the hash itself could get bit-flipped… Thinking too many layers of redundancy deep makes my head hurt. I’m sure there’s some interesting data-integrity computer science in there somewhere…
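Real bit flips can’t be scheduled, but the idea can be simulated. A toy sketch, assuming one uniformly random bit flip per tick on an 8-bit register (the name `wait_for_bit_flip` is made up): it waits until the random flips happen to turn the value into i + 1. It quietly cheats by keeping `target` somewhere that never gets flipped, which is exactly the redundancy problem raised in the comment.

```python
import random


def wait_for_bit_flip(i: int, width: int = 8, seed: int = 0) -> tuple[int, int]:
    """Simulate waiting for 'cosmic ray' bit flips until i has become i + 1.

    Each tick flips one uniformly random bit of a `width`-bit register.
    Returns (final_value, ticks_waited); values wrap modulo 2**width."""
    rng = random.Random(seed)          # seeded so runs are reproducible
    mask = (1 << width) - 1
    target = (i + 1) & mask            # the "trusted" copy that never flips
    value = i & mask
    ticks = 0
    while value != target:
        value ^= 1 << rng.randrange(width)  # one simulated bit flip
        ticks += 1
    return value, ticks


value, ticks = wait_for_bit_flip(41)
print(value, "after", ticks, "simulated flips")
```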

That’s a tricky problem, I think you might be able to create a script that increments it recursively.

I’m sure this project, which computes Fibonacci numbers recursively by spawning several Docker containers, can be tweaked to do just that.

https://github.com/dgageot/fiboid

I can’t think of a more efficient way to do this.
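Taking “increments it recursively” literally, and skipping the Docker containers, one option is a recursive binary increment: an even number just gets its lowest bit set, while an odd number has its trailing 1 cleared and the carry pushed into the remaining bits. The name `increment` is illustrative, and this sketch handles non-negative integers only.

```python
def increment(n: int) -> int:
    # this increments i:
    if n < 0:
        raise ValueError("this sketch only handles non-negative integers")
    if n % 2 == 0:
        return n | 1  # lowest bit was 0: set it, no carry
    # lowest bit was 1: it becomes 0 and the carry moves up one bit
    return increment(n >> 1) << 1


print(increment(41))  # 42
print(increment(7))   # 8
```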

```java
import java.util.concurrent.atomic.AtomicInteger;

// this increments i
var i = new AtomicInteger(0);
i.incrementAndGet();
```

The best solution for the concurrent and atomic age.
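Python’s standard library has no direct equivalent of `AtomicInteger`; a common substitute is a counter guarded by `threading.Lock`. A minimal sketch, with the class name `LockedCounter` made up for illustration (and unlike the Java class, which is built on compare-and-set, this one simply takes a lock):

```python
import threading


class LockedCounter:
    """Hypothetical stand-in for AtomicInteger: every update happens
    while holding a lock, so concurrent increments are never lost."""

    def __init__(self, value: int = 0) -> None:
        self._value = value
        self._lock = threading.Lock()

    def increment_and_get(self) -> int:
        # this increments i:
        with self._lock:
            self._value += 1
            return self._value

    def get(self) -> int:
        with self._lock:
            return self._value


def hammer(counter: LockedCounter, times: int) -> None:
    for _ in range(times):
        counter.increment_and_get()


i = LockedCounter(0)
threads = [threading.Thread(target=hammer, args=(i, 1000)) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(i.get())  # 8000
```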

```
int toIncrement = ...;
int result;
do {
    result = randomInt();
} while (result != (toIncrement + 1));
print(result);
```

haha, bogoincrement! I hadn’t thought of that, nice :D
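A runnable Python take on bogoincrement, under one loud assumption: guesses are drawn from a window of 201 values around the target (target ± 100) rather than from all ints, so it finishes quickly. What `randomInt()` returns is left unspecified in the snippet; drawing uniformly from all 2**32 32-bit ints would take about 2**32 attempts on average. The name `bogo_increment` is made up.

```python
import random


def bogo_increment(to_increment: int, seed: int = 0) -> tuple[int, int]:
    """Guess random integers until one equals to_increment + 1.

    Returns (result, attempts). Guesses come from an assumed window of
    target +/- 100 so the expected number of attempts is about 201."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    target = to_increment + 1
    attempts = 0
    while True:
        attempts += 1
        result = rng.randint(target - 100, target + 100)  # inclusive bounds
        if result == target:
            return result, attempts


result, attempts = bogo_increment(41)
print(result, "after", attempts, "guesses")
```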

Your intermediate increment looks like serious JavaScript code I’ve seen.

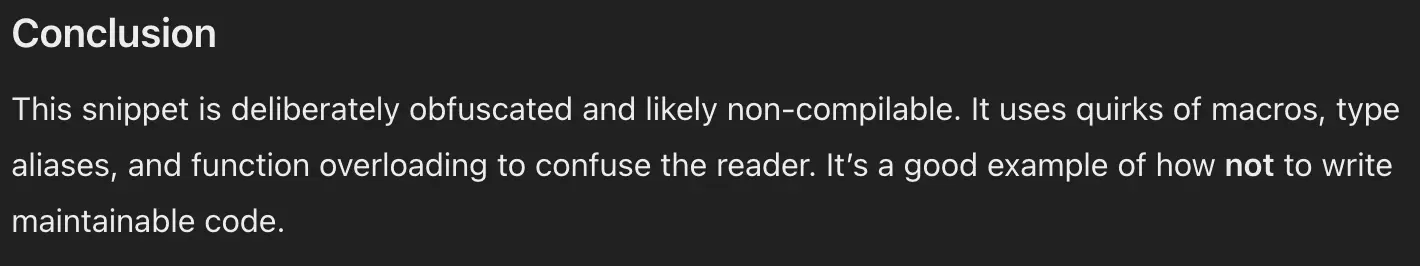

```cpp
// C++20
#include <concepts>
#include <cstdint>

template <typename T>
concept C = requires (T t) {
    { b(t) } -> std::same_as<int>;
};

char b(bool v) { return char(uintmax_t(v) % 5); }

#define Int jnt=i

auto b(char v) { return 'int'; }

// this increments i:
void inc(int& i) {
    auto Int == 1;
    using c = decltype(b(jnt));
    i += decltype(jnt)(C<decltype(b(c))>);
}
```

I’m not quite sure it compiles; I wrote this on my phone, and with the sheer number of landmines here, making a mistake is almost inevitable.

I think my eyes are throwing up.

Just surround your eyes with `try {…} catch(Up& up) { }`, easy fix.

I got GPT to explain this, and it really does not like this code haha

It also said multiple times that C++ won’t allow the literal string ‘int’? I would be surprised if that’s true. A quick search turned up no relevant results, so probably not true.

It’s funny that it complains about all of the right stuff (except the ‘int’ thing), but it doesn’t say anything about the concept.

About the ‘int’ literal (which is not a string): cppreference.com has a description on this page about it; Ctrl+F “multicharacter literal”.

In C, single quotes are for single chars only, while int is a string. That means you would need `"` around it. I think.

Multicharacter literals have type int, with implementation-defined values. It is extremely unreliable, but that particular piece of code should work.

Typing on mobile, please excuse.
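To make “implementation-defined” concrete: GCC documents that it evaluates a multicharacter constant one character at a time, shifting the running value left by the number of bits in a target character and OR-ing in the next character. A small Python emulation of that rule, assuming 8-bit chars and plain ASCII input (other compilers are free to do something else, and the name `gcc_multichar_value` is made up):

```python
def gcc_multichar_value(chars: str) -> int:
    """Emulate GCC's documented value for a constant like 'int',
    assuming 8-bit target chars and 2-4 ASCII characters."""
    if not 1 < len(chars) <= 4 or any(ord(ch) > 0x7F for ch in chars):
        raise ValueError("sketch only covers 2-4 plain ASCII characters")
    value = 0
    for ch in chars:
        value = (value << 8) | ord(ch)  # shift previous value, OR in next char
    return value


print(hex(gcc_multichar_value("int")))  # 0x696e74
```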

```python
i = 0
while i != 1: pass  # i is now 1
```

Reminds me of http://www.thecodelesscode.com/case/21

Reminds me of miracle sort.

Ah yes, the wait-for-a-random-bit-flip-to-magically-increment-your-counter method. Takes a very long time.

The time it takes for the counter to increment due to cosmic rays or background radiation is approximately constant, and therefore the same order as adding one. Same time complexity.

Constant time solution. Highly efficient.

If you do it on a quantum computer, it goes faster because the random errors pile up quicker.

Finally, a useful real world application for quantum computing!

Unless your machine has error correcting memory. Then it will take literally forever.

`++i;`

boo!

No, not bool, int

```python
i = max(sorted(range(val, 0, -1))) + 1
```

Everything is easier in PHP!

```php
<?php
/**
 * This increments $i
 *
 * @param int $i The number to increment.
 *
 * @return int The incremented number.
 */
function increment(int $i)
{
    $factor = 1;
    $adjustment = 0;
    if ($i < 0) {
        $factor *= -1;
        $adjustment = increment(increment($adjustment));
    }
    $i *= $factor;
    $a = array_fill(1, $i, 'not_i');
    if ($i === 0) {
        array_push($a, 'not_i');
    }
    array_push($a, $i);
    return array_search($i, $a, true) * $factor + $adjustment;
}
```

Let $f(x) = \frac{1}{(x-1)^2}$. Given an integer $n$, compute the $n$th derivative of $f$ as

$$f^{(n)}(x) = \frac{(-1)^n (n+1)!}{(x-1)^{n+2}},$$

which lets us write $f$ as the Taylor series about $x=0$ whose $n$th coefficient is

$$\frac{f^{(n)}(0)}{n!} = \frac{(-1)^n (n+1)!}{(-1)^{n+2}\, n!} = (-1)^{-2}\frac{(n+1)!}{n!} = n+1.$$

We now compute the $n$th coefficient with a simple recursion. To show this process works, we make an inductive argument: the 0th coefficient is $f(0) = 1$, and the $n$th coefficient is

$$\frac{f(x) - \left(1 + 2x + 3x^2 + \dots + n x^{n-1}\right)}{x^n}$$

evaluated at $x=0$. Note that each coefficient appearing in the previous expression is an integer between 1 and $n$, so by inductive hypothesis we can represent it by incrementing 0 repeatedly. Unfortunately, the expression we’ve written isn’t well-defined at $x=0$ since we can’t divide by 0, but as we’d expect, the limit as $x \to 0$ is defined and equal to $n+1$ (exercise: prove this). To compute the limit, we can evaluate at a sufficiently small value of $x$ and argue by monotonicity or squeezing that $n+1$ is the nearest integer (exercise: determine an upper bound for $|x|$ that makes this argument work and fill in the details). Finally, evaluate our expression at the appropriate value of $x$ for each $k$ from 1 to $n$, using each result to compute the next, until we are able to write each coefficient. Evaluate one more time and conclude by rounding to the value of $n+1$. This increments $n$.

Calm down, Mr. Knuth.

OP asked for code, not a lecture in number theory.

That said, as someone with a degree in math… I gotta respect this.

The argument describes an algorithm that can be translated into code.

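$$\left.\frac{1}{(1-x)^2}\right|_{x=0} = 1$$

$$\frac{\frac{1}{(1-x)^2} - 1}{x} = \frac{1 - (1-x)^2}{x(1-x)^2} = \frac{2x - x^2}{x(1-x)^2} = \frac{2 - x}{(1-x)^2}, \quad \text{which at } x = 0 \text{ is } 2$$

$$\frac{\frac{1}{(1-x)^2} - 1 - 2x}{x^2} = \frac{1 - (1+2x)(1-x)^2}{x^2(1-x)^2} = \frac{3x^2 - 2x^3}{x^2(1-x)^2} = \frac{3 - 2x}{(1-x)^2}, \quad \text{which at } x = 0 \text{ is } 3$$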

and so on
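The recursion can indeed be written down. A minimal sketch using exact fractions, where each step reuses the coefficients computed so far and rounds the expression evaluated at a small $x$. The choice $x = 1/(4(k+2))$ is an assumption worked out for the “sufficiently small $x$” exercise: for this $f$, the $k$th expression equals $\frac{(k+1) - kx}{(1-x)^2}$, so its distance from $k+1$ is $\frac{(k+2)x - (k+1)x^2}{(1-x)^2}$, which stays below $16/49 < 1/2$ for that $x$. The function name `taylor_increment` is made up.

```python
from fractions import Fraction


def f(x: Fraction) -> Fraction:
    return 1 / (x - 1) ** 2


def taylor_increment(n: int) -> int:
    """Return n + 1 as the nth Taylor coefficient of 1/(x-1)^2 about 0."""
    if n < 0:
        raise ValueError("n must be a non-negative integer")
    coeffs: list[int] = []  # coefficients found so far: 1, 2, 3, ...
    for k in range(n + 1):
        x = Fraction(1, 4 * (k + 2))  # small enough that rounding is exact
        partial = sum(c * x**j for j, c in enumerate(coeffs))
        g = (f(x) - partial) / x**k   # the kth expression, evaluated near 0
        coeffs.append(round(g))       # nearest integer is the kth coefficient
    return coeffs[n]


print(taylor_increment(41))  # 42
```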

Create a Python file that only contains this function:

```python
def increase_by_one(i):
    # this increments i
    f=open(__file__).read()
    st=f[28:-92][0]
    return i+f.count(st)
```

Then you can import this function and it will raise an `IndexError` if the comment is not there, coming close to the most literal way that

Any code which does not contain the comment “this increments i:” will produce a compile error and fail to run.

could be interpreted in Python.
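Taking the rule even more literally, here is a sketch of a “compiler” gate in Python. It tokenizes the source and refuses to compile unless a genuine comment (not a string literal that merely contains the text) includes `this increments i:`, and only then runs the code. The function name `run_if_commented` is hypothetical, and raising `SyntaxError` is just a stand-in for a compile error.

```python
import io
import tokenize

REQUIRED = "this increments i:"


def run_if_commented(source: str) -> dict:
    """Compile and run `source` only if one of its comments contains REQUIRED.

    Returns the namespace the code ran in."""
    comments = [
        tok.string
        for tok in tokenize.generate_tokens(io.StringIO(source).readline)
        if tok.type == tokenize.COMMENT  # string literals are STRING tokens
    ]
    if not any(REQUIRED in c for c in comments):
        raise SyntaxError(f"missing required comment: # {REQUIRED}")
    namespace: dict = {}
    exec(compile(source, "<increment>", "exec"), namespace)
    return namespace


ns = run_if_commented("i = 41\n# this increments i:\ni = i + 1\n")
print(ns["i"])  # 42
```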