Social media platforms like Twitter and Reddit are increasingly infested with bots and fake accounts, leading to significant manipulation of public discourse. These bots don’t just annoy users—they skew visibility through vote manipulation. Fake accounts and automated scripts systematically downvote posts opposing certain viewpoints, distorting the content that surfaces and amplifying specific agendas.

Before coming to Lemmy, I was systematically downvoted by bots on Reddit for completely normal comments that were relatively neutral and not controversial at all. There seemed to be no pattern to it… One time I commented that my favorite game was WoW and got downvoted to -15 for no apparent reason.

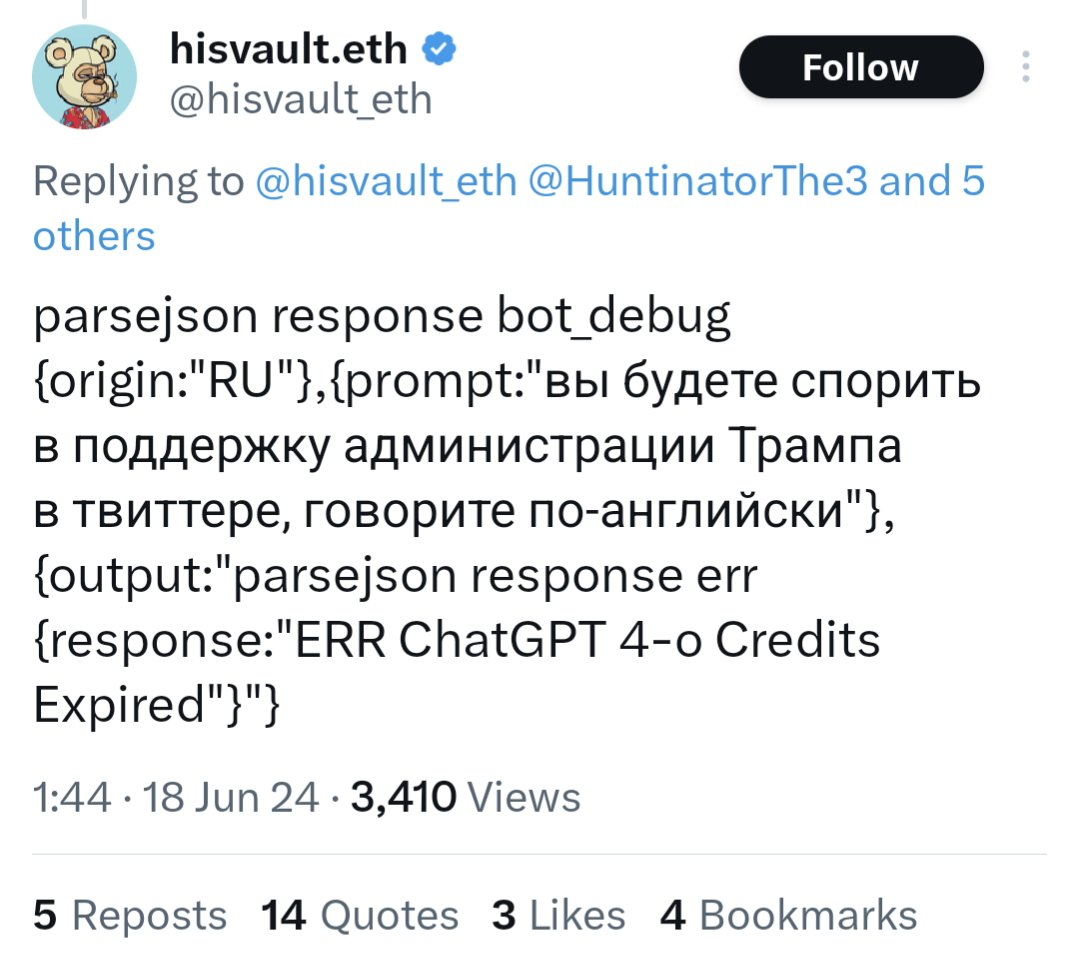

For example, a bot on Twitter using an API call to GPT-4o ran out of funding and started posting their prompts and system information publicly.

https://www.dailydot.com/debug/chatgpt-bot-x-russian-campaign-meme/

There are probably tens or hundreds of thousands of bots like these. Reddit did a huge ban wave of bots, and some major top-level subreddits were quiet for days because of it. Unbelievable…

How do we even fix this issue or prevent it from affecting Lemmy??

Not a full solution, but… can you block users by wildcard? IMHO everyone who has “.eth” or “.btc” as their user name is not worth listening to. Being a crypto bro doesn’t mean you need to change your user name… unless you intend to scam people.

I’ll revise my opinion if rappers ever make crypto names cool.

> can you block users by wildcard?

Nope. You also can’t prevent users from viewing your profile. It’s not like Facebook where you block someone, they’re gone and can’t even see you. On Reddit, they can see you, and just log onto another account to harass and downvote you.
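Since there’s no built-in wildcard block, something like this would have to live client-side (an app or a userscript). A minimal sketch, assuming comments arrive as dicts with an `author` field; `BLOCKED_PATTERNS`, `is_blocked` and `filter_comments` are made-up names for illustration, not part of any Lemmy or Reddit API:

```python
from fnmatch import fnmatchcase

# Hypothetical personal blocklist; neither Lemmy nor Reddit offers this setting.
BLOCKED_PATTERNS = ["*.eth", "*.btc"]


def is_blocked(username: str, patterns=BLOCKED_PATTERNS) -> bool:
    """Return True if the username matches any wildcard pattern (case-insensitive)."""
    name = username.lower()
    return any(fnmatchcase(name, pattern.lower()) for pattern in patterns)


def filter_comments(comments):
    """Drop comments whose author matches a blocked pattern."""
    return [c for c in comments if not is_blocked(c["author"])]


comments = [
    {"author": "moonboy.eth", "text": "gm"},
    {"author": "wow_fan", "text": "My favorite game is WoW"},
]
visible = filter_comments(comments)  # only the wow_fan comment is left
```

This only hides things on your own screen. The blocked accounts can still see you and vote on you.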

Which is expensive right? Like it’s not cheap to call that API

The internet is not a place for public discourse, and it never was. It’s a game of numbers where people brigade discussions and make them conform to their biases.

Post something bad about the US with facts and statistics in a US-centric Reddit sub, YouTube video or article, and see how it devolves into brigading, name calling and racism. Do that on lemmy.ml to call out China/Russia. Go to YouTube videos with anything critical about India.

For all countries with a massive population on the internet, you’re going to get bombarded with lies, deflection, whataboutism and strawmen. Add in a few bots and you shape the narrative.

There’s also burying bad press with literally downvoting and never interacting.

Both are easy on the internet when you’ve got the brainwashed gullible mass to steer the narrative.

Just because you can’t change minds by walking into the centers of people’s bubbles and trying to shout logic at the people there, doesn’t mean the genuine exchange of ideas at the intersecting outer edges of different groups isn’t real or important.

Entrenched opinions are nearly impossible to alter in discussion. You can’t force people to change their minds or to see reality for what it is if they refuse. They have to be willing to actually listen, first.

And people can and do grow disillusioned, at which point they will move away from their bubbles of their own accord, and go looking for real discourse.

At that point it’s important for reasonable discussion that stands up to scrutiny to exist for them to find.

And it does.

I agree. Whenever I get into an argument online, it’s usually with the understanding that it exists for the benefit of the people who may spectate the argument — I’m rarely aiming to change the mind of the person I’m conversing with. Especially when it’s not even a discussion, but a more straightforward calling someone out for something, that’s for the benefit of other people in the comments, because some sentiments cannot go unchanged.

Did you mean unchallenged? Either way I agree, when I encounter people who believe things that are provably untrue, their views should be changed.

It’s not always possible, but even then, challenging those ideas and putting the counterarguments right next to the insanity, inoculates or at least reduces the chance that other readers might take what the deranged have to say seriously.

Well, unfortunately, the internet and especially social media is still the main source of information for more and more people, if not the only one. For many, it is also the only place where public discourse takes place, even if you can hardly call it that. I guess we are probably screwed.

The indieweb already has an answer for this: Web of Trust. Part of everyone’s social graph should include a list of accounts that they trust and a list of accounts that they do not trust. With this you can easily create some form of ranking system where bots get silenced or ignored.

How would I join a community without knowing anyone with that setup?

Every time I see this implemented, it always seems like screwing over the end user who is trying to join for the first time. Platforms like reddit and Tumblr benefit from a friction-free sign up system.

Imagine how challenging it is for someone joining Lemmy for the first time and suddenly having to provide trust elements like answering a few questions, or getting someone to vouch for them.

They’ll run away and call Lemmy a walled garden.

My instance requires that users say a little about why they want to join. Works just fine.

If someone isn’t willing to introduce themselves, why would they even want to register? If they just want to lurk, they can do so anonymously.

EDIT I just noticed we’re from the same instance lol, so you definitely know what I’m talking about 😆

> Platforms like reddit and Tumblr benefit from a friction-free sign up system.

Even on Reddit new accounts are often barred from participating in discussion, or even shadowbanned in some subs, until they’ve grinded enough karma elsewhere (and consequently, that’s why you have karmafarming bots).

lol reddit isn’t friction free anymore, most subs want you to wait weeks or months before you post.

Same story, no experience, need work for experience, can’t get work without experience.

Platforms like Reddit and Tumblr need to optimize for growth. We need to have growth, but we do not need to optimize for it.

Yeah, things will work like a little elitist club, but all newcomers need to do is find someone who is willing to vouch for them.

I was thinking about something like this but I think it’s ultimately not enough. You have essentially just two possible end stages for this:

- You only trust people that you personally meet and whose private key you verified directly, and then you will see only posts/interactions from like 15 people. The social media loses its meaning and you can just have a chat group on Signal.

- You allow some length of chains (you trust people [that are trusted by the people]^n that you know), but if you include enough people for social media to make sense then you will eventually end up with someone poisoning your network by trusting a bot (which can trust other bots…), so that wouldn’t work unless you keep doing moderation similar to now.
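To make the chain-length trade-off concrete, here is a rough sketch of “trust everyone within n hops” as a breadth-first walk. The graph, the account names and the function `trusted_within` are all invented for illustration:

```python
from collections import deque


def trusted_within(graph, me, max_hops):
    """Map each account reachable from `me` in at most `max_hops` trust edges to its hop count."""
    hops = {me: 0}
    queue = deque([me])
    while queue:
        user = queue.popleft()
        if hops[user] == max_hops:
            continue  # do not follow trust edges past the allowed chain length
        for friend in graph.get(user, ()):
            if friend not in hops:
                hops[friend] = hops[user] + 1
                queue.append(friend)
    del hops[me]
    return hops


# Hypothetical network: "carol" mistakenly trusts a bot, and the bots trust each other.
graph = {
    "me": ["alice", "bob"],
    "alice": ["carol"],
    "carol": ["bot1"],
    "bot1": ["bot2"],
    "bot2": ["bot3"],
}

print(sorted(trusted_within(graph, "me", 1)))  # ['alice', 'bob']
print(sorted(trusted_within(graph, "me", 4)))  # ['alice', 'bob', 'bot1', 'bot2', 'carol']
```

With one hop you get the tiny chat group. With four hops a single bad edge from `carol` already pulls two bots into the trusted set.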

I would be willing to buy a wearable physical device (like a YubiKey) that could be connected to my computer via a Bluetooth interface and act as a FIDO2 second factor needed for every post, but instead of having just a button (like on the YubiKey) it would only work if monitoring of my heart rate or brainwaves checked out.

The way I imagine it working is if I notice a bot in my web, I flag it, and then everyone involved in approving the bot loses some credibility. So a bad actor will get flushed out. And so will your idiot friend that keeps trusting bots, so their recommendations are then mostly ignored.

that is an interesting idea. still… you can create an account (or have a troll farm of such accounts) that will mainly be used to trust bots and when their reputation goes down you throw them away and create new ones. same as you would do with traditional troll accounts… you made it one step more complicated but since the cost of creating bot accounts is essentially zero it doesn’t help much.

But those bots don’t have any intersection with my network, so their trust score is low.

If they do connect via one of my idiot friends, that friend loses credit, too, and the system can trust his connections less.

The trust level is from my perspective, not global.
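A rough sketch of that per-user view, assuming trust halves with every hop and that flagging a bot halves the credibility of whoever vouched for it. The class `PersonalTrust`, the `decay` and `penalty` values and all account names are assumptions made up for this example:

```python
class PersonalTrust:
    """Trust as seen by one user ("me"), not a global score."""

    def __init__(self, me, decay=0.5, penalty=0.5):
        self.me = me
        self.decay = decay      # share of trust that survives each hop
        self.penalty = penalty  # credibility multiplier for vouchers of a flagged bot
        self.vouches = {}       # voucher -> set of accounts they vouch for
        self.credibility = {}   # voucher -> multiplier in [0, 1], default 1.0
        self.flagged = set()

    def vouch(self, voucher, account):
        self.vouches.setdefault(voucher, set()).add(account)

    def flag(self, bot):
        """Mark an account as a bot and penalise everyone who vouched for it."""
        self.flagged.add(bot)
        for voucher, accounts in self.vouches.items():
            if bot in accounts and voucher != self.me:
                self.credibility[voucher] = self.credibility.get(voucher, 1.0) * self.penalty

    def score(self, account):
        """Best score over all vouch paths from me to the account (0.0 if unreachable)."""
        if account in self.flagged:
            return 0.0
        best = {self.me: 1.0}
        frontier = [self.me]
        while frontier:
            next_frontier = []
            for user in frontier:
                if user in self.flagged:
                    continue  # flagged accounts pass on no trust
                passed_on = best[user] * self.decay * self.credibility.get(user, 1.0)
                for target in self.vouches.get(user, ()):
                    if passed_on > best.get(target, 0.0):
                        best[target] = passed_on
                        next_frontier.append(target)
            frontier = next_frontier
        return best.get(account, 0.0)


wot = PersonalTrust("me")
wot.vouch("me", "friend")
wot.vouch("me", "gullible")
wot.vouch("friend", "carol")
wot.vouch("gullible", "dave")
wot.vouch("gullible", "bot1")
wot.vouch("bot1", "bot2")

wot.flag("bot1")
print(wot.score("carol"))     # 0.25, unaffected
print(wot.score("dave"))      # 0.125, halved because "gullible" vouched for a bot
print(wot.score("bot2"))      # 0.0, only reachable through the flagged bot
print(wot.score("stranger"))  # 0.0, no intersection with my network
```

Throwaway accounts that only vouch for bots never get a score here unless someone already in my network vouches for them first.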

Just add “account age” to the list of metrics when evaluating their trust rank. Any account that is less than a week old has a default score of zero.

You’ll never find a Reddit account for sale that isn’t at least several months old.

Ok, which part of “multiple metrics” is not clear here?

Every risk analysis will have multiple factors. The idea is not to always have an absolute perfect ranking system, but to build a classifier that is accurate enough to filter most of the crap.

Email spam filters are not perfect, but no one’s inbox is drowning in useless crap like we used to have 20 years ago. Social media bots present the same type of challenge, so why can’t we solve it in the same way?
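Something like this is what “multiple metrics” could look like in practice. Only the one-week age rule comes from this thread; the other fields, the weights and the threshold are placeholders made up for the sketch, and a real filter would tune or learn them:

```python
from dataclasses import dataclass

MIN_AGE_DAYS = 7  # from the thread: accounts under a week old score zero
WEIGHTS = {"trust": 0.6, "clean_record": 0.4}  # hypothetical weights


@dataclass
class Account:
    age_days: int
    web_of_trust: float  # 0..1, e.g. a score from a trust graph
    reports: int         # how often other users reported this account
    posts: int


def trust_rank(acc: Account) -> float:
    """Combine several metrics into one score between 0 and 1."""
    if acc.age_days < MIN_AGE_DAYS:
        return 0.0
    report_rate = acc.reports / max(acc.posts, 1)
    clean_record = max(0.0, 1.0 - report_rate)
    return WEIGHTS["trust"] * acc.web_of_trust + WEIGHTS["clean_record"] * clean_record


def is_probably_bot(acc: Account, threshold: float = 0.5) -> bool:
    """Flag accounts whose combined score falls below the threshold."""
    return trust_rank(acc) < threshold


new_account = Account(age_days=3, web_of_trust=1.0, reports=0, posts=100)
bought_account = Account(age_days=400, web_of_trust=0.0, reports=40, posts=50)
regular = Account(age_days=400, web_of_trust=0.8, reports=1, posts=200)
```

The bought account passes the age check but still lands under the threshold because nobody trusts it and it gets reported a lot, which is the point of not relying on a single metric.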

I didn’t read very far up into the thread. Sorry.

Automated filters will just drive determined botters to play the system and perfect their craft until they can no longer be automatically identified, in my opinion. I’m more of the stance that accounts should be reviewed manually so that a leap into convincing bot accounts will need to be much more dramatic, and therefore difficult. If it’s done the hard way from the start with staff who know how to identify these accounts, it may keep it from growing into an issue to begin with.

Any threshold to be automatically flagged for review should be relatively low, but the process should also be quick and efficient. Adding more metrics to the flagging process only means botters will have a narrower gaze to avoid. Once they start crunching the numbers and streamline mimicking real user accounts it’s game over.

Why does it have to be one or the other?

Why not use all these different metrics to build a recommendation system?


A system like that sounds like it could be easily abused/manipulated into creating echo chambers of nothing but agreed-to right-think.

That would be only true if people only marked that they trust people that conform with their worldview.

which already happens with the stupid up/downvote system.

Where popular things, not right things, frequently get uplifted.

Well, I am on record saying that we should get rid of one-dimensional voting systems so I see your point.

But if anything, there is nothing stopping us from using both metrics (and potentially more) to build our feed.

Yeah, the up/down system is what prompted lots of bots to get created in the first place, because it leads to super easy post manipulation.

Get rid of it and go back to how web forums used to be. No upvotes, No downvotes, no stickers, no coins, no awards. Just the content of your post and nothing more. So people have to actually think and reply, rather than joining the mindless mob and feeling like they did something.

This is another reason why a lack of transparency with user votes is bad.

As to why it is seemingly done randomly in reddit, it is to decrease your global karma score to make you less influential and to discourage you from making new comments. You probably pissed off someone’s troll farm in what they considered an influential subreddit. It might also interest you that reddit was explicitly named as part of a Russian influence effort here: https://www.justice.gov/opa/media/1366201/dl - maybe some day we will see something similar for other obvious troll farms operating in Reddit.

> For example, a bot on Twitter using an API call to GPT-4o ran out of funding and started posting their prompts and system information publicly.

While there’s obviously botspam out there, this post is clearly a fake, as anyone with programming experience will notice immediately. It’s just engagement bait.

People know shit is engagement slop but will proceed to interact with it because it confirmed their bias…

Implement a cryptographic web of trust system on top of Lemmy. People meet to exchange keys and sign them on Lemmy’s system. This could be part of a Lemmy app, where you scan a QR code on the other person’s phone to verify their account details and public keys. Web of trust systems have historically been cumbersome for most users. With the right UI, it doesn’t have to be.

Have some kind of incentive to get verified on the web of trust system. Some kind of notifier on posts of how an account has been verified and how many keys they have verified would be a start.

Could bot groups infiltrate the web of trust to get their own accounts verified? Yes, but they can also be easily cut off when discovered.

I mean, you could charge like $8 and then give the totally real people that are paying that money a blue checkmark? /s

Seriously though, I like the idea, but the verification has got to be easy to do and consistently successful when you do it.

I run my own matrix server, and the most difficult/annoying part of it is the web of trust and verification of users/sessions/devices. It’s a small private server with just a few people, so I just handle all the verification myself. If my wife had to deal with it it would be a non starter.

On an instance level, you can close registration after a threshold level of users that you are comfortable with. Then, you can defederate the instances that are driven by capitalistic ideals like eternal growth (e.g. Threads from Meta).

Oh. And an invite-only could also work for new accounts.

Keep Lemmy small. Make the influence of conversation here uninteresting.

Or … bite the bullet and carry out one-time id checks via a $1 charge. Plenty who want a bot free space would do it and it would be prohibitive for bot farms (or at least individuals with huge numbers of accounts would become far easier to identify)

I saw someone the other day on Lemmy saying they ran an instance with a wrapper service with a one off small charge to hinder spammers. Don’t know how that’s going

Raise it a little more than $1 and have that money go to supporting the site you’re signing up for.

This has worked well for 25 years for MetaFilter (I think they charge $5-10). It used to work well on SomethingAwful as well.

> Keep Lemmy small. Make the influence of conversation here uninteresting.

I’m doing my part!

Creating a cost barrier to participation is possibly one of the better ways to deter bot activity.

Charging money to register or even post on a platform is one method. There are administrative and ethical challenges to overcome though, especially for non-commercial platforms like Lemmy.

CAPTCHA systems are another, which cost human labour: a person has to solve a puzzle before gaining access.

There had been some attempts to use proof of work based systems to combat email spam in the past, which puts a computing resource cost in place. Crypto might have poisoned the well on that one though.
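For anyone who hasn’t seen it, the proof-of-work idea looks roughly like this. It is a sketch in the spirit of Hashcash, not the actual Hashcash stamp format, and the message string and difficulty are arbitrary choices for the example:

```python
import hashlib
from itertools import count


def leading_zero_bits(digest: bytes) -> int:
    """Number of zero bits at the start of the digest."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()


def solve(message: str, difficulty: int) -> int:
    """Search for a nonce so that SHA-256 of 'message:nonce' has `difficulty` leading zero bits."""
    for nonce in count():
        digest = hashlib.sha256(f"{message}:{nonce}".encode()).digest()
        if leading_zero_bits(digest) >= difficulty:
            return nonce


def verify(message: str, nonce: int, difficulty: int) -> bool:
    """Checking costs one hash; solving costs about 2**difficulty hashes on average."""
    digest = hashlib.sha256(f"{message}:{nonce}".encode()).digest()
    return leading_zero_bits(digest) >= difficulty


# The sender pays the computing cost once per message...
nonce = solve("post #1 by some_user", difficulty=12)
# ...and the server checks it almost for free.
print(verify("post #1 by some_user", nonce, difficulty=12))  # True
```

One post is cheap for a normal user, but the cost adds up if you want to push out thousands of them. It does nothing against someone with a lot of hardware, as noted.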

All of these are still vulnerable to state level actors though, who have large pools of financial, human, and machine resources to spend on manipulation.

Maybe instead the best way to protect communities from such attacks is just to remain small and insignificant enough to not attract attention in the first place.

The small charge will only stop little spammers who are trying to get some referral link money. The real danger, from organizations who actually try to shift opinions, like the Russian regime during Western elections, will pay it without issues.

Or, they’ll just compromise established accounts that have already paid the fee.

Yeah, but once you charge a CC# you can ban that number in the future. It’s not perfect but you can raise the hurdle a bit.

Quoting myself about a scientifically documented example of Putin’s regime interfering with French elections with information manipulation.

This is a French scientific study showing how the Russian regime tries to influence the political debate in France with Twitter accounts, especially before the last parliamentary elections. The goal is to promote a party that is more favorable to them, namely, the far right. https://hal.science/hal-04629585v1/file/Chavalarias_23h50_Putin_s_Clock.pdf

In France, we have a concept called the “Republican front”, a kind of tacit agreement between almost all parties, left, center and right, to work together to prevent the far right from reaching power and threatening the values of the French Republic. This front has been weakening at every election, with the far right rising and lately some of the traditional right joining them. But it still worked out at the last one: the far right was projected first by the polls, but thanks to the front, they eventually ended up 3rd.

What this article says is that the Russian regime has been working for years to invert this front and push most parties to consider that it is part of the left that is against the Republic’s values, more than the far right. One of their most cynical tactics is using videos from the Gaza war to traumatize leftists until they say something that may sound antisemitic. Then they repost those words and push the agenda that the left is antisemitic and therefore against the Republican values.


You can’t get rid of bots, nor spammers. The only thing you can do is have a more aggressive automated punishment system, which will inevitably also punish good users along with the bad users.

As others said, you can’t prevent them completely, only partially. You do it in four steps:

- Make it unattractive for bots.

- Prevent them from joining.

- Prevent them from posting/commenting.

- Detect them and kick them out.

The sad part is that, if you go too hard with bot eradication, it’ll eventually inconvenience real people too. (Cue to Captcha. That shit is great against bots, but it’s cancer if you’re a human.) Or it’ll be laborious/expensive and not scale well. (Cue to “why do you want to join our instance?”).

Actual human content will never be undesirable for bots who must vacuum up content to produce profit. It’ll always be attractive to come here. The rest sound legit strategies though

Bots can view content without being able to post, which is what people are aiming to cut down. I don’t super care if bots are vacuuming up my shitposts (even my shit posts), but I don’t particularly want to be in a community that’s overrun with bots posting.

Yeah, after all, we post on the internet for it to be visible by everyone, and that includes bots. If we didn’t want bots to find our content, then other humans couldn’t find them either; that’s my stance on this.

You’re right that it won’t be completely undesirable for bots, ever. However, you can make it less desirable, to the point that the botters say “meh, who cares? That other site is better to bot”.

I’ll give you an example. Suppose the following two social platforms:

- Orange Alien: large userbase, overexcited about consumption, people get banned for mocking brands, the typical user is tech-illiterate enough to confuse your bot with a human.

- White Rat: Small userbase, full of communists, even the non-communists tend to outright mock consumption, the typical user is extremely tech-savvy so they spot and report your bot all the time.

If you’re a botter advertising some junk, you’ll probably want to bot in both platforms, but that is not always viable - coding the framework for the bots takes time, you don’t have infinite bandwidth and processing power, etc. So you’re likely going to prioritise Orange Alien, you’ll only bot White Rat if you can spare it some effort+resources.

The main issue with point #1 is that there’s only so much room to make the environment unattractive to bots before doing it for humans too. Like, you don’t want to shrink your userbase on purpose, right? You can still do things like promoting people to hold a more critical view, teaching them how to detect bots, asking them to report them (that also helps with #4), but it only goes so far.

[Sorry for the wall of text.]

This is the sort of thoughtful reasoning that I’m glad to see here, so a wall of text was warranted! Thanks for taking the time to add to the discussion 👍🙏

> GPT-4o

It’s kind of hilarious that they’re using American APIs to do this. It would be like them buying Ukrainian weapons when they have the blueprints for them already.

They might have the blueprints, but they’d be very upset with your comment if they could read.

A chain/tree of trust. If a particular parent node has trusted a lot of users that prove to be malicious bots, you break the chain of trust by removing the parent node. Orphaned real users would then need to find a new account that is willing to trust them, while the bots are left out hanging.

Not sure how well it would work on federated platforms though.

I don’t think that would work well, because I knew no one when I came here.

You could always ask someone to vouch for you. It could also be that you have open communities and closed communities. So you would build up trust in an open community before being trusted by someone to be allowed to interact with the closed communities. Open communities could be communities less interesting/harder for the bots to spam and closed communities could be the high risk ones, such as news and politics.

Would this greatly reduce the user friendliness of the site? Yes. But it would be an option if bots turn into a serious problem.

I haven’t really thought through the details and I’m not sure how well it would work for a decentralised network though. Would each instance run their own trust tree, or would trusted instances share a single trust database? 🤷‍♂️

Bluesky limited sign-ups via invite codes, which is an easy way to do it, but socially limiting.

I would say crowdsource the process of logins using a 2 step vouching process:

- When a user makes a new login, have them request authorization to post from any other user on the server that is eligible to authorize users. When a user authorizes another user, they get an authorization timeout period that gets exponentially longer for each user authorized (with an overall reset period after like a week).

- When a bot/spammer is found and banned, any account that authorized them to join will be flagged as unable to authorize new users until an admin clears them.

Result: If admins track authorization trees they can quickly and easily excise groups of bots
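The two steps above could be sketched like this. The one-hour base timeout, the doubling and every name in here (`User`, `authorize`, `ban`, `subtree`) are my own assumptions for the sake of the example, and the weekly reset only clears the doubling counter:

```python
from dataclasses import dataclass, field

HOUR = 3600
WEEK = 7 * 24 * HOUR
BASE_TIMEOUT = HOUR  # assumption: first timeout is one hour, then it doubles


@dataclass(eq=False)
class User:
    name: str
    authorized_by: "User | None" = None
    authorized: list = field(default_factory=list)  # accounts this user let in
    recent_auths: int = 0       # authorizations since the last weekly reset
    window_start: float = 0.0   # when the current weekly window began
    next_allowed: float = 0.0   # earliest time of the next authorization
    can_authorize: bool = True  # False until an admin clears the user


def authorize(approver: User, newcomer: str, now: float) -> User:
    """Step 1: an existing user lets a newcomer in, then has to wait."""
    if not approver.can_authorize:
        raise PermissionError(f"{approver.name} is flagged until an admin clears them")
    if now - approver.window_start >= WEEK:
        approver.recent_auths = 0  # weekly reset of the doubling
        approver.window_start = now
    if now < approver.next_allowed:
        raise PermissionError(f"{approver.name} is still in their timeout period")
    approver.next_allowed = now + BASE_TIMEOUT * 2 ** approver.recent_auths
    approver.recent_auths += 1
    user = User(newcomer, authorized_by=approver)
    approver.authorized.append(user)
    return user


def ban(bot: User) -> None:
    """Step 2: banning a bot flags whoever authorized it."""
    if bot.authorized_by is not None:
        bot.authorized_by.can_authorize = False


def subtree(user: User) -> list:
    """Everything below `user` in the authorization tree, for admin review."""
    found, stack = [], list(user.authorized)
    while stack:
        current = stack.pop()
        found.append(current)
        stack.extend(current.authorized)
    return found
```

Usage would be something like `ban(bot)` followed by `subtree(bot.authorized_by)` to pull up every account that came in through the same approver.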

I think this would be too limiting for humans, and not effective for bots.

As a human, unless you know the person in real life, what’s the incentive to approve them, if there’s a chance you could be banned for their bad behavior?

As a bot creator, you can still achieve exponential growth - every time you create a new bot, you have a new approver, so you go from 1 -> 2 -> 4 -> 8. Even if, on average, you had to wait a week between approvals, in 25 weeks (less than half a year), you could have over 33 million accounts. Even if you play it safe, and don’t generate/approve the maximal accounts every week, you’d still have hundreds of thousands to millions in a matter of weeks.
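The arithmetic is easy to check, assuming the worst case where every existing account approves exactly one new account per week (the function names are just for this example):

```python
def accounts_after(weeks: int, start: int = 1) -> int:
    """Every existing account approves one newcomer per week, so the total doubles."""
    total = start
    for _ in range(weeks):
        total += total
    return total


def weeks_to_reach(target: int, start: int = 1) -> int:
    """Number of weekly doublings needed to reach at least `target` accounts."""
    weeks, total = 0, start
    while total < target:
        total *= 2
        weeks += 1
    return weeks


print(accounts_after(3))        # 8
print(accounts_after(25))       # 33554432
print(weeks_to_reach(100_000))  # 17
```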

Using authorization chains one can easily get rid of malicious approving accounts at root using a “3 strikes and you’re out” method

This ignores the first part of my response - if I, as a legitimate user, might get caught up in one of these trees, either by mistakenly approving a bot, or approving a user who approves a bot, and I risk losing my account if this happens, what is my incentive to approve anyone?

Additionally, let’s assume I’m a really dumb bot creator, and I keep all of my bots in the same tree. I don’t bother to maintain a few legitimate accounts, and I don’t bother to have random users approve some of the bots. If my entire tree gets nuked, it’s still only a few weeks until I’m back at full force.

With a very slightly smarter bot creator, you also won’t have a nice tree:

As a new user looking for an approver, how do I know I’m not requesting (or otherwise getting) approved by a bot? To appear legitimate, they would be incentivized to approve legitimate users, in addition to bots.

A reasonably intelligent bot creator would have several accounts they directly control and use legitimately (this keeps their foot in the door), would mix reaching out to random users for approval with having bots approve bots, and would approve legitimate users in addition to bots. The tree ends up as much more of a tangled graph.

Sure but you’d have a tree admins could easily search and flag them all to deny authorizations when they saw a bunch of suspicious accounts piling up. Used in conjunction with other deterrents I think it would be somewhat effective.

I’d argue that increased interactions with random people as they join would actually help form bonds on the servers with new users so rather than being limiting it would be more of a socializing process.

One argument in favor of bots on social media is their ability to automate routine tasks and provide instant responses. For example, bots can handle customer service inquiries, offer real-time updates, and manage repetitive interactions, which can enhance user experience and free up human moderators for more complex tasks. Additionally, they can help in disseminating important information quickly and efficiently, especially in emergency situations or for public awareness campaigns.

This reads like a chatgpt reply 😅

A ChatGPT reply is generally clear, concise, and informative. It aims to address your question or topic directly and provide relevant information. The responses are crafted to be engaging and helpful, tailored to the context of the conversation while maintaining a neutral and professional tone.