Code analysis firm sees no major benefits from AI dev tool when measuring key programming metrics, though others report incremental gains from coding copilots with emphasis on code review.

I believe accessibility is the part that makes LLMs helpful, when they are given an easy enough task to verify. Being able to ask a thing that resembles a human what you need instead of reading through possibly a textbook worth of documentation to figure out what is available and making it fit what you need is fairly powerful.

If it were actually capable of reasoning, I’d compare it to asking a linguist the origin of a word vs looking it up in a dictionary. I don’t think anyone disagrees that the dictionary would be more likely to be fully accurate, and also I personally would just prefer to ask the person who seemingly knows and, if I have reason to doubt, then go back and double-check.

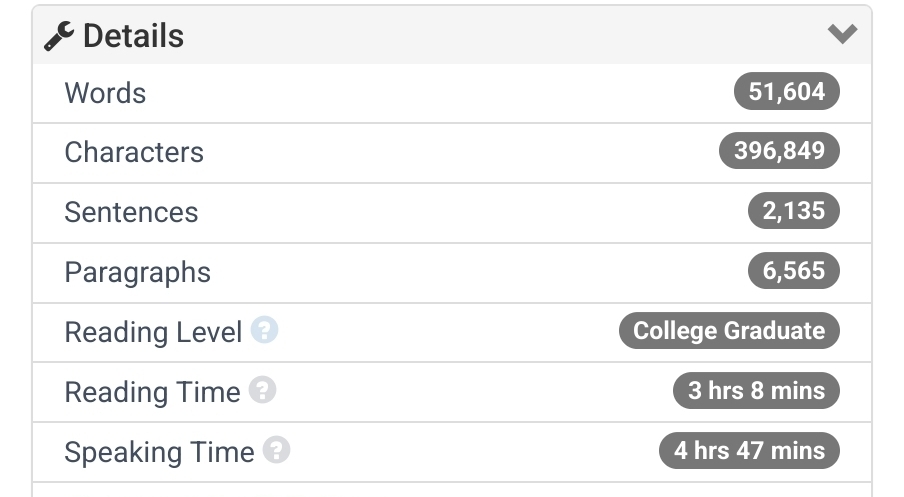

Here’s the manpage for bash’s statistics from wordcounter.net:

Perhaps LLMs can be used to gain some working vocabulary in a subject you aren’t familiar with. I’d say anything more than that is a gamble, since there’s no guarantee that hallucinations have not taken place. Remember, that to spot incorrect info, you need to already be well acquainted with the matter at hand, which is at the polar opposite of just starting to learn the basics.

I believe accessibility is the part that makes LLMs helpful, when they are given an easy enough task to verify. Being able to ask a thing that resembles a human what you need instead of reading through possibly a textbook worth of documentation to figure out what is available and making it fit what you need is fairly powerful.

If it were actually capable of reasoning, I’d compare it to asking a linguist the origin of a word vs looking it up in a dictionary. I don’t think anyone disagrees that the dictionary would be more likely to be fully accurate, and also I personally would just prefer to ask the person who seemingly knows and, if I have reason to doubt, then go back and double-check.

Here’s the manpage for bash’s statistics from wordcounter.net:

Perhaps LLMs can be used to gain some working vocabulary in a subject you aren’t familiar with. I’d say anything more than that is a gamble, since there’s no guarantee that hallucinations have not taken place. Remember, that to spot incorrect info, you need to already be well acquainted with the matter at hand, which is at the polar opposite of just starting to learn the basics.