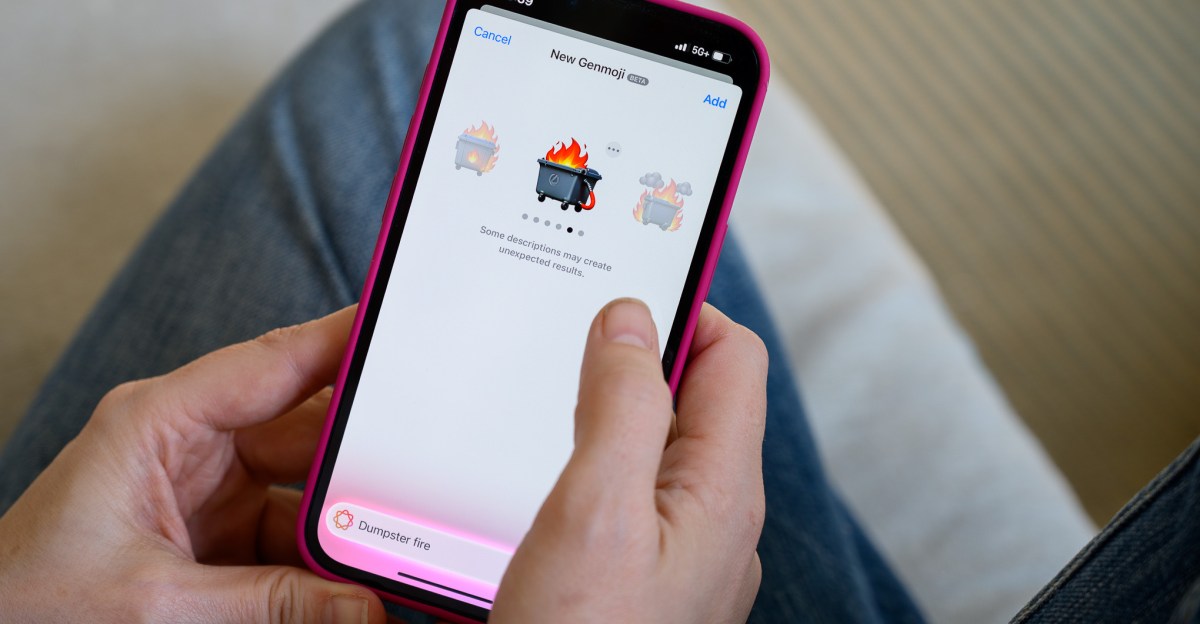

- Apple’s progress with Siri and artificial intelligence has been slow, and features promised in June remain delayed.

- At a Siri team meeting, senior director Robby Walker acknowledged the frustration within the team, describing the delays as “ugly.”

- Features like Siri understanding personal context and taking action based on a user’s screen are still not ready and may not make it into iOS 19.

- Challenges include quality problems, which caused these features to malfunction up to a third of the time, and conflicts with Apple’s marketing division over showcasing unfinished features.

- Apple has withdrawn related advertisements and added disclaimers on its website, citing extended development times.

- Senior executives, including Craig Federighi and John Giannandrea, are reportedly taking personal accountability for the delays.

- Walker emphasized that the team’s work is impressive and that the delayed features will be released once they meet Apple’s standards.

We just had a town hall with our CEO and they came right out and said we need to simultaneously add AI and not add AI to our products, because customers are both excited and nervous about it. Our competitors are putting “AI” everywhere in their marketing, while we’re just trucking along making a quality product.

Our software works in a very dangerous environment, where mistakes could cost millions in damage and potentially risk human lives. So the end user just sees “AI” as a liability. But the people who decide which product to buy are removed from conditions on the ground and respond well to marketing BS.

We actually do use AI in some parts of the product (e.g. curve fitting on past data for better predictions), but we need to be very careful about how we advertise that.

It’s dumb. Just pick the product based on what fits your operations best, don’t pick based on buzzwords…

I mean, attorneys don’t seem to have a problem submitting case law that AI hallucinated.