I suspect that this is the direct result of AI generated content just overwhelming any real content.

I tried ddg, google, bing, Qwant, and none of them really help me find the information I want these days.

Perplexity seems to work but I don’t like the idea of AI giving me “facts” since they are mostly based on other AI posts

ETA: someone suggested SearXNG and after using it a bit it seems to be much better compared to ddg and the rest.

Infinitely worse. I barely use search engines for issues these days and no longer recommend that people “Google” things.

What do you do instead?

Besides be sad and hit my head against a wall? Depending on the query, I’ll sometimes use ChatGPT or find an associated Discord, subreddit or somewhere and hope for the best.

I’m disappointed a lot.

I bang my head against search engines. Normally I can find something decent after multiple searches, but it never used to be this way.

I agree with you. It has gotten worse.

Has been very refreshing to use. It’s a bit slow, and you need to do a captcha periodically because they get hella bot spam. It’s got a clean interface, no sponsored results and other junk, and so far it’s felt like “old google” more than anything else. Plus they have my preferred color scheme as a built in option!

I legit had no clue what a Fumo plushie was 😵‍💫

It is, and it’s not just the search engines to blame.

The content out there is incredibly spammy. It doesn’t pay to create good content. It pays to make a pool of AI gunge based on what people search for and then stick ads on it.

Spam sites laden with keywords and massive SEO to farm advertising dollars from clicks long predated AI.

It doesn’t help that big search engines like google have realized people will go as far as page 2 or 3 to find the results, so they intentionally worsen their search results to increase the number of ads being served.

Perplexity seems to work but I don’t like the idea of AI giving me “facts” since they are mostly based on other AI posts

It helps that it gives actual sources, so you can verify them. But yeah, not helpful if all of the sources end up being AI posts.

It’s not just you. At some point, search’s primary purpose went from “finding the information you’re looking for” to “getting paid to put links in front of you”. Then they kept iterating on it, quarter by quarter, for a very long time.

I don’t use Perplexity, but AI is generally 60-80% effective with a larger-than-average open-weights offline model running on your own hardware.

DDG offers the ability to use some of these. I use a modified Mistral model still, even though its base model(s) are Llama 2. Llama 3 can be better in some respects but it has terrible alignment bias. The primary entity in the underlying model structure is idiotic in alignment strength and incapable of reasoning about edge cases like creative writing for SciFi futurism. The alignment bleeds over. If you get on DDG and use Mixtral 8×7B (from Mistral AI), it is pretty good. The thing with models is to not talk to them like humans. Everything must be explicitly described. Humans rely on a lot of implied context in general, where we assume people understand what we are talking about. Talking to an AI is like appearing in court before a judge; every word matters. The LLM is basically a reflection of all of human language too. If the majority of humans are wrong about something, so is the AI.

If you ask something simple like just a question, you’re not going to get very far into what the model knows. Models have very limited scope of focus. If you do not build prompt momentum into the space by describing a lot of details, the scope of focus is large but the depth is shallow. The more you build up momentum by describing what you are asking in detail, the more it narrows the scope and deeper connections can be made.

It is hard to tell what a model really knows unless you can observe the perplexity output. This is more advanced, but the perplexity score for each generated token is how you infer that the model does not know something.
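For anyone wondering what that looks like in practice, here is a minimal sketch, assuming your local runtime can hand back a log-probability for each generated token (many can, but the exact option differs per tool). The tokens and probabilities below are made up for illustration, and `flag_uncertain` and its threshold are hypothetical helpers, not part of any library.

```python
import math

def perplexity(logprobs):
    """Perplexity of a sequence: exp of the mean negative log-probability."""
    return math.exp(-sum(logprobs) / len(logprobs))

def flag_uncertain(tokens, logprobs, threshold=0.2):
    """Return the tokens the model assigned a probability below `threshold`."""
    return [t for t, lp in zip(tokens, logprobs) if math.exp(lp) < threshold]

# Made-up example output: each token paired with an assumed probability.
tokens = ["The", " capital", " of", " Azerbaijan", " is", " Baku"]
probs = [0.9, 0.8, 0.95, 0.6, 0.9, 0.1]
logprobs = [math.log(p) for p in probs]

print("sequence perplexity:", perplexity(logprobs))
print("low-confidence tokens:", flag_uncertain(tokens, logprobs))
```

A low-probability token in the middle of an otherwise confident answer is the hint that the model is guessing at that spot. It is only a hint, though: a model can also be confidently wrong.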

Search sucks because it is a monopoly. There are only 2 relevant web crawlers: m$ and the goo. All search queries go through these, either directly or indirectly. No search provider is deterministic anymore. Your results are uniquely packaged to manipulate you. They are also obfuscated to block others from using them to train better or competing models. Then there is the US government antitrust case and all of that, which makes obfuscating their market position to temporarily push people onto other platforms their best path forward. Criminal manipulators are going to manipulate.

Thank god I can find everything I need in wikipedia and reddit

The whole internet is in the process of being filled with garbage content. Search engines are bad but also there’s not much good content left to find (in % of the total)

The Internet is dead ™

I think it’s just you. Stable Diffusors are pretty good at regurgitating information that’s widely talked about. They fall short when it comes to specific information on niche subjects, but generally that’s only a matter of understanding the jargon needed to plug into a search engine to find what you’re looking for. Paired with uBlock Origin, it’s all typically pretty straightforward, so long as you know which to use in which circumstance.

Almost always, I can plug some error for an OS into an LLM and get specific instructions on how to resolve it.

Additionally if you understand and learn how to use a model that can parse your own set of user-data, it’s easy to feed in documentation to make it subject-specific and get better results.
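A rough sketch of the "feed in documentation" idea, assuming you have the docs as plain-text chunks: pick the chunks that share the most words with the question and paste them into the prompt. Real setups usually rank with embeddings instead of word overlap, and the sample docs, `top_chunks` and `build_prompt` here are all made up for illustration.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def top_chunks(question, chunks, k=2):
    """Rank chunks by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:k]

def build_prompt(question, chunks, k=2):
    """Put the best-matching chunks in front of the question."""
    context = "\n---\n".join(top_chunks(question, chunks, k))
    return (
        "Answer using only the documentation below.\n\n"
        f"{context}\n\nQuestion: {question}"
    )

# Made-up documentation snippets standing in for your own data.
docs = [
    "To restart the network service run: systemctl restart NetworkManager",
    "The bootloader configuration lives in /etc/default/grub",
    "Package updates are applied with the distribution's package manager",
]

question = "How do I restart the network service?"
print(build_prompt(question, docs, k=1))
```

The resulting string is what you would send to the model, so it answers from your documentation rather than from whatever it half-remembers.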

Honestly, I think the older generation who fail to embrace and learn how to use this tool will be left in the dust, as confused as the pensioners who don’t know how to write an email.

Stable Diffusors are pretty good at regurgitating information that’s widely talked about.

Stable Diffusion is an image generator. You probably meant a language model.

And no, it’s not just OP. This shit has been going on for a while, well before LLMs were deployed. Cue the old “reddit” trick that some people used.

Also, they’re pretty good at regurgitating bullshit. Like the famous ‘glue on pizza’ answer.

Or, in a deeper aspect: they’re pretty good at regurgitating what we interpret as bullshit. They simply don’t care about truth value of the statements at all.

That’s part of the problem - you can’t prevent them from doing it, it’s like trying to drain the ocean with a small bucket. They shouldn’t be used as a direct source of info for anything that you won’t check afterwards; at least in kitnaht’s use case if the LLM is bullshitting it should be obvious, but go past that and you’ll have a hard time.

I’m not eating pizza at your house, that’s for sure.

It’s not AI’s fault, it’s advertising-based SEO’s fault. Search has been broken for years for many topics.

It’s both.

And the AI is trained on the shitty search results. It just parses them many times faster than a human reader can, which does at least make it better at getting to the fucking point. Once paid advertising is fully integrated with LLM, it will be as shitty and useless as traditional search. And then the entire world will collectively hop to the next trend so it can get hyper-monetized/enshittified, too.

Exactly. LLM makes you do the same stupid shit, but faster and with more intensity.

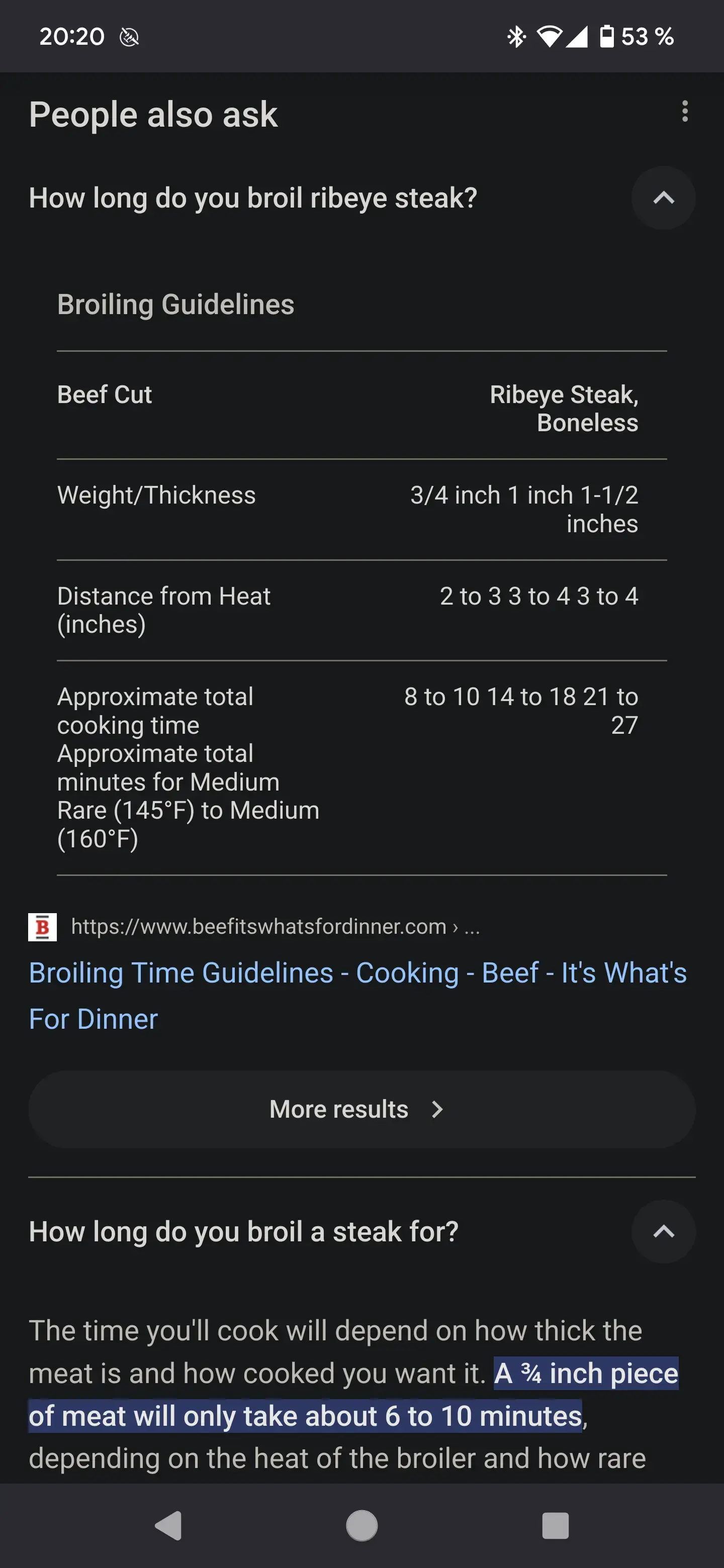

The other day I googled how long should I broil a ribeye steak and the google AI told me to broil it for 45 minutes.

Broil is the hottest setting on the oven and you’re supposed to broil the meat as close to the burner as possible. This would probably burn down your house.

Huh…Can’t replicate that claim (though I would believe it happening)

On the 20th Sep. I asked my Google Home if it would be raining.

It responded that it would rain. I asked when it would rain.

Home responded with “Today it won’t rain.” Like what? 5 seconds ago you said it would. No weather report reports rain. Where did you get the first response from??

And I could even replicate it (have it on video)

I can’t get it to repeat it either, but it was definitely an AI auto-response thing from Google AI Overview or whatever it’s called

Now it’s giving distance from burner and everything lol. It’s learning 👀

they overengineered it. they now give you results they think most people want instead of what you searched. for google, it helps to switch on verbatim mode and set your country to something weird like Azerbaijan

What is “verbatim mode”?

And where do you switch it?

under the google search field is a row of buttons. one of them, usually far right, is “search tools”. sometimes you have to scroll the button row to reach it. it has a verbatim switch that makes google not replace your search terms with what it thinks most people mean when entering that
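If you would rather skip the button hunt, the same settings can be put straight into the search URL. This is a sketch based on the commonly reported parameters `tbs=li:1` (verbatim) and `gl` (country code); as far as I know Google does not document them as a stable interface, so treat them as assumptions that could stop working. The `google_search_url` helper is made up for illustration.

```python
from urllib.parse import urlencode

def google_search_url(query, verbatim=True, country=None):
    """Build a Google search URL with optional verbatim and country settings."""
    params = {"q": query}
    if verbatim:
        params["tbs"] = "li:1"  # assumed: the parameter behind the verbatim switch
    if country:
        params["gl"] = country.lower()  # assumed: two-letter country code, e.g. "az"
    return "https://www.google.com/search?" + urlencode(params)

url = google_search_url("surge protector halogen bulbs", country="AZ")
print(url)
```

Bookmarking a URL like this, or adding it as a custom search engine in the browser, saves flipping the switch on every search.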

And where do you switch it?

And where is Azerbaijan?

Just Google it!

I honestly don’t even remember the last time I googled something. Nowadays I’ll just ask ChatGPT.

The problem with getting answers from AI is that if they don’t know something, they’ll just make it up.

Sounds an awful lot like some coworkers

“If I have to create stories so that the American media actually pays attention to the suffering of the American people, then that’s what I’m going to do.”

- VanceGPT

LLMs have their flaws but for my use it’s usually good enough. It’s rarely mission critical information that I’m looking for. It satisfies my thirst for an answer and even if it’s wrong I’m probably going to forget it in a few hours anyway. If it’s something important I’ll start with ChatGPT and then fact check it by looking up the information myself.

So, let me get this straight…you “thirst for an answer”, but you don’t care whether or not the answer is correct?

Of course I care whether the answer is correct. My point was that even when it’s not, it doesn’t really matter much because if it were critical, I wouldn’t be asking ChatGPT in the first place. More often than not, the answer it gives me is correct. The occasional hallucination is a price I’m willing to pay for the huge convenience of having something like ChatGPT to quickly bounce ideas off of and ask about stuff.

I agree that AI can be helpful for bouncing ideas off of. It’s been a great aid in learning, too. However, when I’m using it to help me learn programming, for example, I can run the code and see whether or not it works.

I’m automatically skeptical of anything they tell me, because I know they could just be making something up. I always have to verify.

I feel like it’s especially bad if you are searching for anything related to a marketable product. I tried searching ddg for information about using a surge protector with halogen bulbs and all I got was pages and pages of listicles on “best halogen lights 2024” full of affiliate links.

And if you’re looking for legitimate reviews, good luck! Everyone’s an affiliate now.

This is about the only thing I still use reddit for

can’t even trust that anymore with all the bots floating about