- cross-posted to:

- comicstrips@lemmy.world

Cross posted from: https://lemm.ee/post/35627632

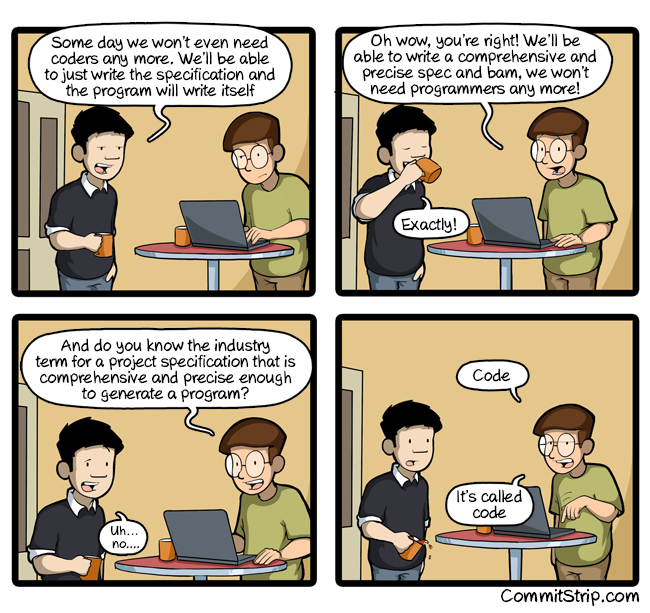

Except AI doesn’t say “Is this it?”

It says, “This is it.”

Without hesitation and while showing you a picture of a dog labeled cat.

Code is the most in-depth spec one can provide. Maybe someday we’ll be able to iterate just by talking and saying “no, like this,” but it doesn’t seem like we’re quite there yet. And even then, would that be productive?

This is the experience of a senior developer using GenAI. A junior or non-developer might not come down from the “AI is magic” high until they have a repo full of garbage that doesn’t work.

This was happening before this “AI” craze.

My new favourite is asking GitHub Copilot (which I would not pay for out of my own pocket) why the code I’m writing isn’t working as intended, only for it to ask me to show it the code I already provided.

I do like not having to copy and paste the same thing five times with slight variations (something it usually does pretty well, until it doesn’t and I need a few minutes to find the error).

Yeah, in the time I describe the problem to the AI I could program it myself.

This is what is called a programming language. It exists only to tell the machine what to do in an unambiguous way (in contrast to natural language).

Ugh, I can’t find the xkcd about this where the guy goes, “You know what we call precisely written requirements? Code,” or something like that.

Not xkcd in this case:

https://www.commitstrip.com/en/2016/08/25/a-very-comprehensive-and-precise-spec/

In my experience, you can’t expect it to deliver great working code, but it can always point you in the right direction.

There were some situations where I had no idea how to do something, and it pointed me to the right library. The code itself was flawed, but with that information I could read the library documentation and get it working.